AI-Moderated Research on Research: AIMIs vs. Static Online Surveys

By Glaut

Research methodologies evolve when new tools solve problems the old ones couldn’t. Twenty-five years ago, online surveys transformed data collection by enabling scale. Today, AI-moderated interviews (AIMIs) are introducing a different kind of shift, one that addresses persistent challenges in gathering meaningful open-ended responses. The question isn’t whether AIMIs replace existing methods, but what researchers gain when they understand how AIMIs work and where they add value.

To answer this question rigorously, we at Glaut launched our Research on Research programme, an initiative that gives independent researchers, firms, and institutions free access to AIMI platforms and covers panel costs, whilst leaving them complete autonomy over study design, fieldwork, and analysis. The programme’s goal is to build a transparent, scholarly collection of findings that the market research community can analyse, use, reproduce, and build upon.

The study presented here compares AIMIs with static online surveys, revealing how conversational interaction shapes the depth, quality, and usability of open-ended responses. Review the study in detail here.

Understanding the Research Question

Open-ended questions have long been a cornerstone of qualitative insight, yet they remain a persistent challenge in survey research. Respondents often give brief, low-effort answers to open-ended prompts, forcing researchers to spend significant time cleaning sparse data and extracting meaning from minimal text. AIMIs address this challenge through conversational interaction. Rather than presenting static questions in sequence, an AIMI conducts the interview as a conversation, asking follow-up questions based on the respondent’s previous answers.

With these differences in mind, Aylin Idrizovski and Dr. Florian Stahl (Chair of Quantitative Marketing & Consumer Analytics, University of Mannheim) used our platform to conduct an independent study, “AI-Moderated Interviews vs. Static Online Surveys: A Comparative Study on Qualitative Response Quality”, comparing the quality of responses collected via AIMI with those collected via traditional static surveys.

To isolate the effect of the collection method, the researchers kept all other elements constant (the topic, the questions, and the sample size) and varied only the format. One group of 100 respondents answered via AIMI, whilst another group of 100 answered a static online survey. This design means that differences in responses can be attributed to the collection method itself.

The Volume and Quality of Responses

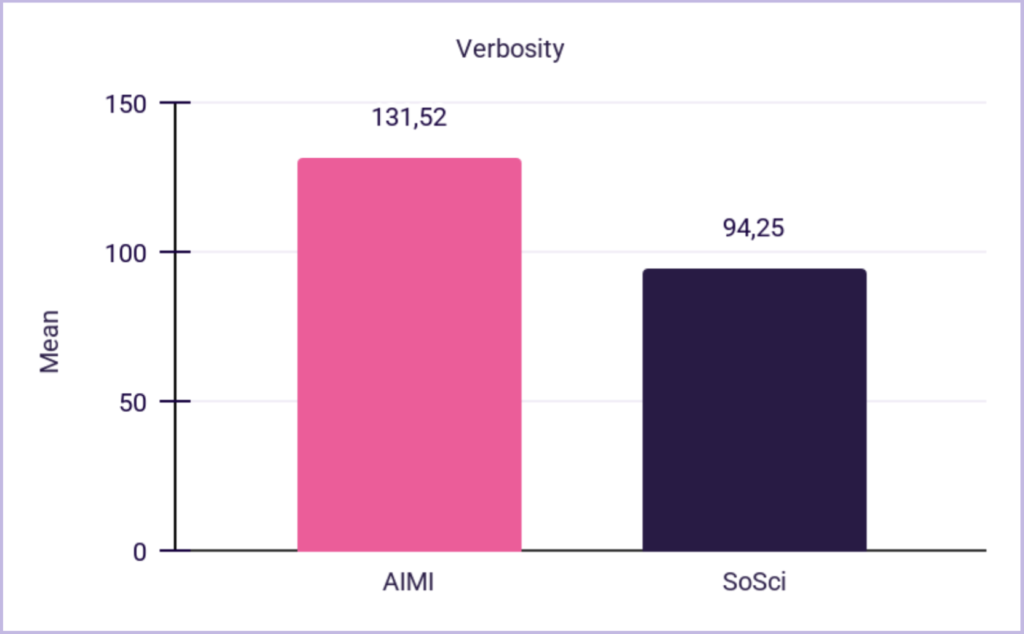

The differences emerged immediately when examining response volume. Respondents using AIMIs produced approximately 39% more words than those using static surveys (131.5 words versus 94.3 words). Yet volume alone doesn’t tell the full story.
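The percentages in this article appear to be relative differences measured against the static-survey figure, which matches the reported numbers. A minimal Python sketch of that arithmetic, assuming this baseline convention (the helper name `percent_increase` is our own):

```python
def percent_increase(new: float, baseline: float) -> float:
    """Relative increase of `new` over `baseline`, in percent."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (new - baseline) / baseline * 100


# Word counts reported in the study: AIMI vs static survey
print(f"{percent_increase(131.5, 94.3):.1f}%")  # 39.4%
```

The same calculation applied to 8.76 versus 6.42 themes gives roughly 36%.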

Verbosity

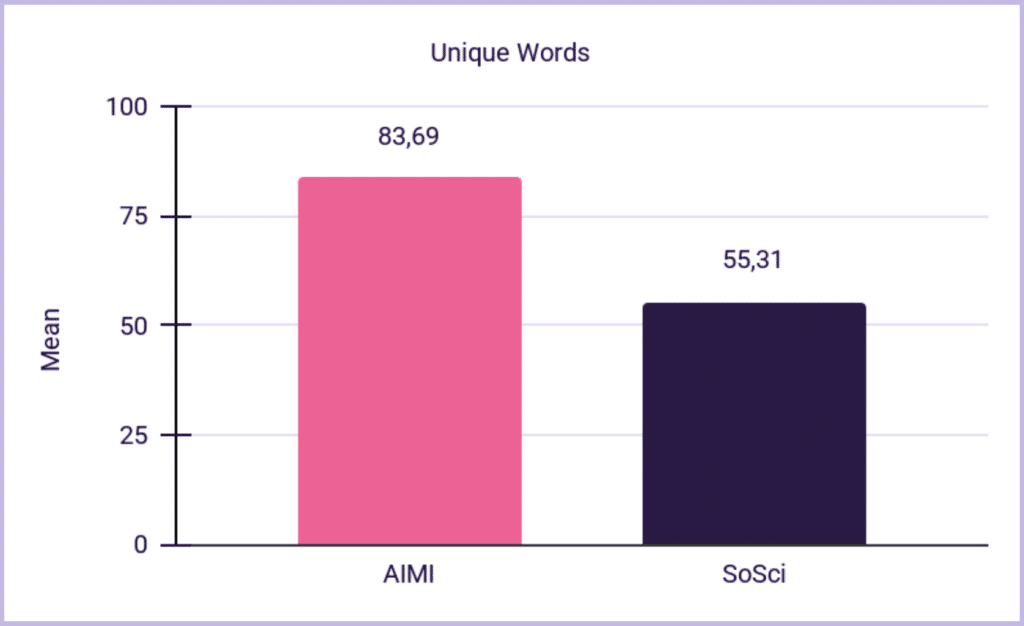

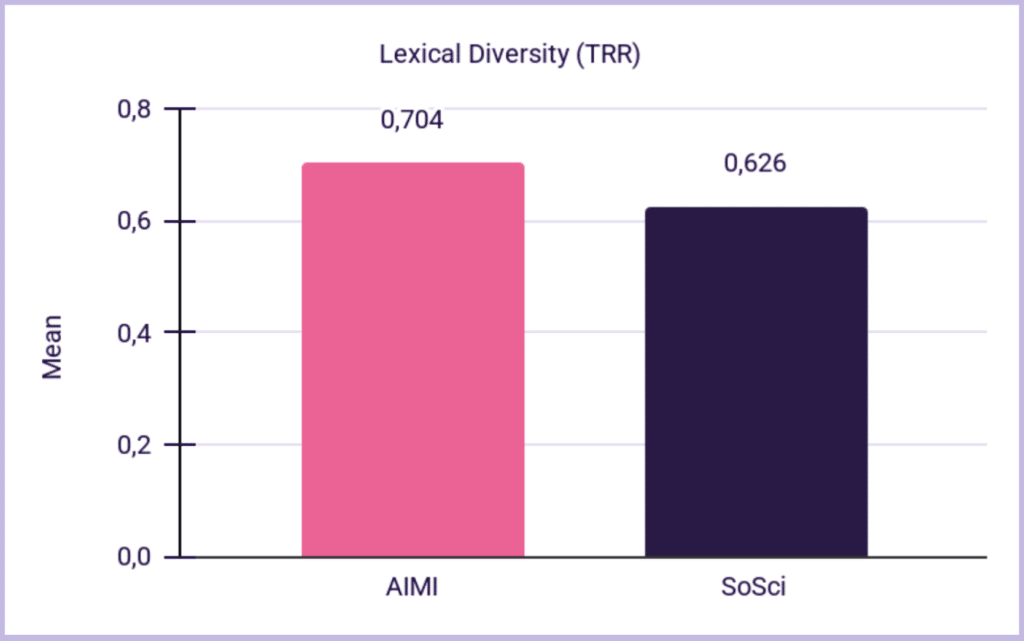

The quality of language improved significantly as well. AIMI responses contained roughly 51% more unique words, indicating that respondents drew on a broader vocabulary. More tellingly, AIMI responses showed higher lexical diversity, measured by the Type-Token Ratio (TTR: the number of unique words divided by the total number of words), at 0.704 compared with 0.626 for static surveys. Lexical diversity matters because it indicates that respondents are expressing ideas with greater nuance and drawing on a richer linguistic range.

Unique Words

Lexical Diversity
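To make the two language metrics concrete, here is a minimal Python sketch that computes the unique-word count (types), the total word count (tokens), and their ratio (TTR) for a single response. The study’s exact tokenisation and aggregation are not described here; the sketch assumes simple lowercasing and a regular-expression word split, and the function name is our own.

```python
import re


def type_token_ratio(text: str) -> tuple[int, int, float]:
    """Return (unique word types, total tokens, TTR) for one response.

    Assumed tokenisation: lowercase the text, then treat runs of ASCII
    letters, digits and apostrophes as words.
    """
    tokens = re.findall(r"[a-z0-9']+", text.lower())
    if not tokens:
        return 0, 0, 0.0
    types = set(tokens)
    return len(types), len(tokens), len(types) / len(tokens)


types, tokens, ttr = type_token_ratio("I like the app because the app is fast")
print(types, tokens, round(ttr, 3))  # 7 9 0.778
```

One caveat when reading TTR: it tends to fall as a text gets longer, because common words start to repeat, so comparisons between responses of different lengths are best interpreted with that in mind.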

Thematic Breadth and Depth

Beyond language metrics, the breadth of ideas respondents explored differed substantially. AIMI responses generated approximately 36% more unique themes (8.76 versus 6.42), indicating that respondents addressed more distinct topics and dimensions of each question when given the opportunity for conversational interaction. This expansion of thematic coverage has direct implications for analysis: researchers coding and analysing responses have more material to work with and encounter a fuller picture of how respondents think about the subject matter.
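The article does not describe how themes were coded. Assuming each response has already been coded into theme labels, the “unique themes” figure can be read as the average number of distinct labels per respondent. A minimal sketch; the respondent IDs and theme labels below are hypothetical:

```python
from statistics import mean


def mean_unique_themes(coded: dict[str, list[str]]) -> float:
    """Average number of distinct theme codes per respondent."""
    # set() collapses a theme that the same respondent mentions more than once
    return mean(len(set(codes)) for codes in coded.values())


# Hypothetical coded responses, for illustration only
coded_responses = {
    "r1": ["price", "speed", "price", "support"],
    "r2": ["speed"],
    "r3": ["design", "price"],
}
print(mean_unique_themes(coded_responses))  # 2
```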

Data validity also improved. The AIMI format produced no gibberish responses, whereas 10% of static survey responses were gibberish. This reduction in throwaway responses means researchers spend less time filtering data during the cleaning phase and can focus their analytical effort on substantive responses.

The Participant Experience Factor

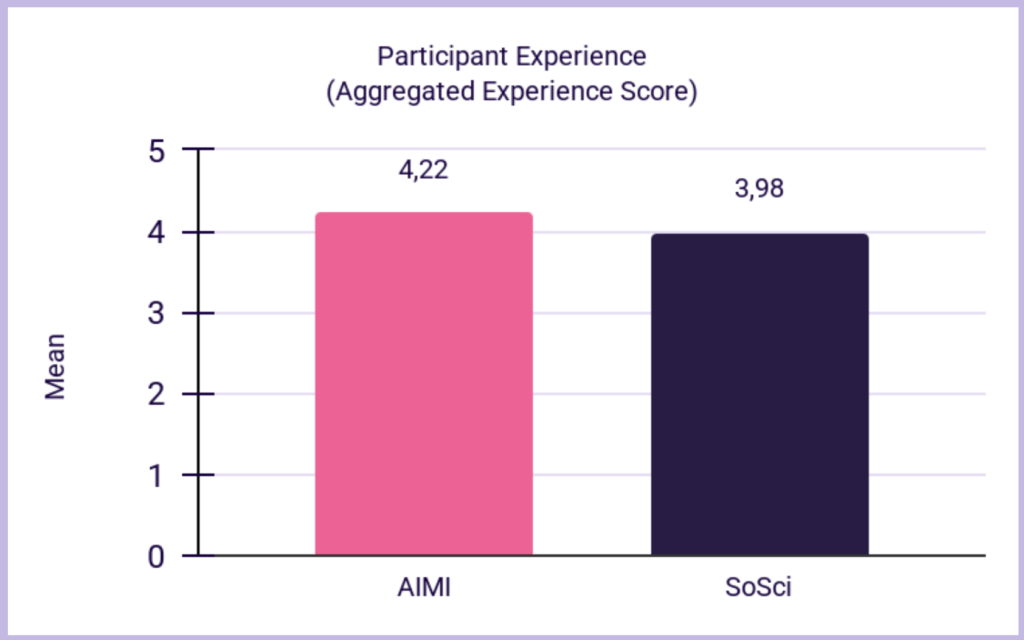

Beyond measurable response quality, respondents also experienced the two formats differently. Participants reported higher overall satisfaction with AIMIs: a satisfaction score of 4.22 versus 3.98 for the static survey, about 6% higher and a statistically significant difference (p = .02, d = 0.33). They also described a stronger sense of conversational flow, felt more understood by the process, and reported higher trust in how their data would be handled.

Participant Experience
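The effect size d = 0.33 is Cohen’s d, which expresses the difference between two group means in units of standard deviation. The article does not state which variant was used; a common choice for two independent groups divides by the pooled standard deviation, which is what the minimal Python sketch below assumes. The ratings in the usage example are hypothetical. As a rough check under that assumption, the reported mean difference of 0.24 (4.22 minus 3.98) divided by d = 0.33 implies a pooled standard deviation of about 0.73, allowing for rounding.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(a: list[float], b: list[float]) -> float:
    """Cohen's d for two independent groups, using the pooled standard deviation."""
    n_a, n_b = len(a), len(b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two observations")
    # statistics.variance uses the sample (n - 1) denominator
    pooled_var = ((n_a - 1) * variance(a) + (n_b - 1) * variance(b)) / (n_a + n_b - 2)
    return (mean(a) - mean(b)) / sqrt(pooled_var)


# Hypothetical satisfaction ratings, for illustration only
aimi = [5, 4, 4, 5, 3]
static = [4, 3, 4, 4, 3]
print(round(cohens_d(aimi, static), 2))  # 0.85
```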

Respondents also perceived AIMIs as less repetitive and expressed a higher likelihood of recommending the format to others. These experience metrics matter because research fatigue is a recognised challenge in survey research. When respondents feel heard and engaged, they’re more likely to provide thoughtful answers and participate in future studies – benefits that extend beyond any single research project.

What This Means for Researchers

These findings demonstrate that AIMIs don’t replace survey methodology; they enhance what surveys can capture in open-ended questions. For researchers designing studies, the evidence suggests that AIMIs work most effectively as a tool for deepening open-ended data and broadening thematic coverage.

The practical advantages are tangible. The reduction in gibberish and low-effort responses lowers the cleaning burden during analysis. The expansion of thematic coverage and lexical diversity means that open-ended data carries more analytical weight. The improved participant experience also has long-term value for research communities relying on panel participation.

For researchers evaluating AIMIs, the evidence-based insights from our Research on Research programme provide a foundation for making informed decisions – check out the programme for yourself.