AI Moderated Research on Research: Conversational AI vs Traditional Online Surveys

By Glaut

Online surveys have been a cornerstone of quantitative research for decades, but the standardisation that makes them scalable often limits the depth of open-ended responses. Conversational AI interfaces are emerging as a solution to this challenge, offering the potential to combine the efficiency of surveys with the richness of qualitative interviews. But do they genuinely improve data quality, or simply produce more words?

To answer this question rigorously, Human Highway conducted an independent study comparing conversational AI with traditional online surveys through Glaut’s Research on Research programme. The findings reveal that AI-moderated conversations don’t just increase response length but fundamentally transform how participants express their thinking.

Understanding the Research Question

Open-ended questions in traditional surveys frequently produce brief, fragmented responses that require substantial cleaning and offer limited analytical depth. The lack of follow-up questions means researchers cannot probe further, clarify ambiguity, or encourage elaboration as they would in qualitative interviews.

Conversational AI interfaces address this limitation by interpreting initial responses in real time and generating tailored follow-up prompts. The addition of voice capability further reduces cognitive friction, allowing participants to express themselves more naturally than when typing.
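The probing behaviour described above can be sketched as a simple loop. This is a minimal illustration, not Glaut's implementation: `run_open_question`, the hooks `get_answer` and `make_follow_up`, and the word-count threshold are all hypothetical. In a real system `make_follow_up` would typically call a language model and `get_answer` would collect a typed or transcribed reply; here both are plain callables so the control flow can be exercised with scripted answers.

```python
from typing import Callable, List


def run_open_question(
    question: str,
    get_answer: Callable[[str], str],
    make_follow_up: Callable[[str, str], str],
    min_words: int = 15,
    max_follow_ups: int = 2,
) -> List[str]:
    """Ask one open question, then probe while the combined answer stays thin.

    All names and thresholds here are hypothetical, for illustration only.
    """
    answers = [get_answer(question)]
    for _ in range(max_follow_ups):
        total_words = sum(len(a.split()) for a in answers)
        if total_words >= min_words:
            break  # enough material collected; stop probing
        # Tailor the probe to everything the participant has said so far.
        probe = make_follow_up(question, " ".join(answers))
        answers.append(get_answer(probe))
    return answers


# Usage with scripted replies standing in for a participant.
replies = iter([
    "Reviews help.",
    "They show me what other guests disliked about the room and the noise at night.",
])
answers = run_open_question(
    "How do online reviews affect your holiday planning?",
    get_answer=lambda prompt: next(replies),
    make_follow_up=lambda q, so_far: f"You said '{so_far}'. Could you give an example?",
)
print(len(answers))  # 2: one probe was enough to pass the threshold
```

Capping the number of follow-ups keeps the interview length predictable, which matters when the method is meant to retain survey-like scale.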

Human Highway designed a study to isolate the effect of the collection method, holding the topic and the weighting scheme constant across groups. Two independent surveys were conducted: one using a traditional online questionnaire (503 participants via the OpLine panel) and the other using conversational AI (500 participants via the PureSpectrum panel). Both surveys covered the same topic (online reviews in holiday planning), and both groups were weighted by gender, age and geographic area to ensure comparability.
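The article does not say which weighting procedure Human Highway applied. One common way to weight a sample to several marginal targets at once (such as gender, age and area) is raking, also called iterative proportional fitting; the sketch below assumes that approach. The function name `rake`, the category labels and the target shares are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


def rake(
    sample: List[Dict[str, str]],
    targets: Dict[str, Dict[str, float]],
    iterations: int = 50,
) -> List[float]:
    """Raking (iterative proportional fitting) to marginal population shares.

    Weights are scaled so that they sum to the sample size.
    """
    weights = [1.0] * len(sample)
    total = float(len(sample))
    for _ in range(iterations):
        for var, shares in targets.items():
            # Current weighted count in each category of this variable
            sums: Dict[str, float] = defaultdict(float)
            for w, person in zip(weights, sample):
                sums[person[var]] += w
            # Rescale so each category's weighted share matches its target
            weights = [
                w * shares[person[var]] * total / sums[person[var]]
                for w, person in zip(weights, sample)
            ]
    return weights


sample = [
    {"gender": "F", "age": "18-34"},
    {"gender": "F", "age": "35+"},
    {"gender": "F", "age": "35+"},
    {"gender": "M", "age": "18-34"},
    {"gender": "M", "age": "35+"},
]
targets = {  # toy population shares, not the study's
    "gender": {"F": 0.5, "M": 0.5},
    "age": {"18-34": 0.4, "35+": 0.6},
}
weights = rake(sample, targets)
```

Raking matches each variable's marginal shares but not every gender-by-age combination; weighting on the full cross-classification (cell weighting) is the alternative when joint population targets are available.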

Critically, the AI group could choose their response mode: text only, voice only, or a combination. This self-selection revealed participant preferences: 72% chose text only, 23% chose voice only, and 1% used both modes.

Volume and Informational Richness

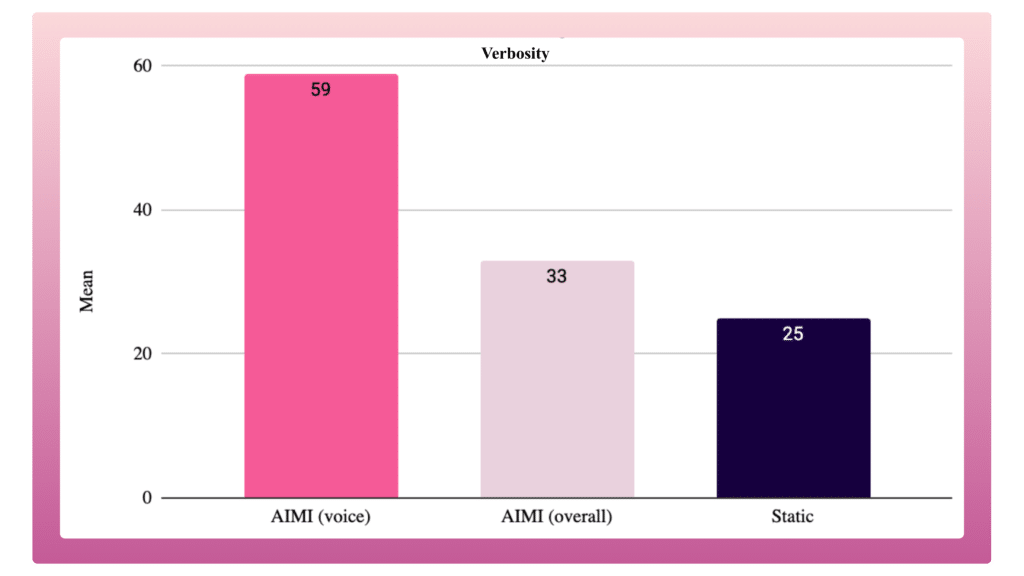

The differences in response volume emerged immediately. AI-moderated responses averaged 32.78 words per response, approximately 30% longer than traditional survey responses (25.25 words). Yet this increase in length translated into genuine informational gain rather than mere verbosity.

AI responses contained 8.55% more distinct lemmas (25.00 versus 23.03), indicating that participants activated a more varied vocabulary. More significantly, they generated 24.11% more distinct concepts (9.73 versus 7.84), demonstrating that respondents addressed a broader range of ideas when given conversational prompts.
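Counting distinct lemmas per response is straightforward once each word has been reduced to its dictionary form. The study's lemmatiser is not named, so the sketch below takes the lemmatiser as a parameter; the tiny lookup table `TOY_LEMMAS` is a hypothetical stand-in for a real language-specific lemmatiser. Distinct concepts require a concept-extraction step the article does not describe, so they are not sketched here.

```python
import re
from typing import Callable, List


def distinct_lemmas(text: str, lemmatize: Callable[[str], str]) -> int:
    """Number of different lemmas used in one response."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return len({lemmatize(t) for t in tokens})


def mean_distinct_lemmas(responses: List[str], lemmatize: Callable[[str], str]) -> float:
    """Average distinct-lemma count across a set of responses."""
    return sum(distinct_lemmas(r, lemmatize) for r in responses) / len(responses)


# Hypothetical lookup table standing in for a real lemmatiser.
TOY_LEMMAS = {"reviews": "review", "reading": "read", "helps": "help"}


def toy_lemmatize(word: str) -> str:
    return TOY_LEMMAS.get(word, word)


print(distinct_lemmas("I read reviews; reading a review helps.", toy_lemmatize))  # 5
```

Because "reviews"/"review" and "read"/"reading" collapse to one lemma each, the count reflects vocabulary variety rather than raw length.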

The voice modality amplified these effects further. Voice responses averaged 58.65 words – more than double the traditional mode – with 38 distinct lemmas and 13.36 distinct concepts. This suggests that spoken interaction removes the cognitive interruption imposed by typing, enabling participants to develop their reasoning more fluidly and naturally.
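The relative differences quoted in this section follow directly from the reported means. The snippet below recomputes them so the arithmetic can be checked; the function name `pct_increase` is ours, and the inputs are the figures given above.

```python
def pct_increase(new: float, baseline: float) -> float:
    """Percentage by which `new` exceeds `baseline`."""
    return (new - baseline) / baseline * 100


# (AI mode, traditional survey) means as reported in the article
reported = {
    "words per response": (32.78, 25.25),
    "distinct lemmas": (25.00, 23.03),
    "distinct concepts": (9.73, 7.84),
    "words per response, voice only": (58.65, 25.25),
}
for metric, (ai, traditional) in reported.items():
    print(f"{metric}: {pct_increase(ai, traditional):+.2f}%")
# words per response: +29.82%
# distinct lemmas: +8.55%
# distinct concepts: +24.11%
# words per response, voice only: +132.28%
```

The voice figure corresponds to about 2.3 times the traditional mean, which is what supports the "more than double" description.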

Semantic Cohesion and Argumentative Depth

Beyond volume, the structural quality of responses differed substantially. Semantic cohesion, the degree to which sentences connect to form coherent meaning, increased from 0.213 in traditional surveys to 0.338 in AI mode, approximately 59% higher. The difference exceeded the 95% confidence margin, so it is statistically significant at that level.

In voice-only mode, semantic cohesion reached 0.558, more than double the traditional mode. Voice responses resembled short speeches rather than isolated statements, with concepts linked logically and arguments developed across multiple sentences.
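The article does not specify how semantic cohesion was computed. A common approach is to average the similarity between consecutive sentences; the sketch below assumes that approach and uses simple bag-of-words cosine similarity as a stand-in for the sentence embeddings a production pipeline would more likely use. Its scores are therefore not on the same scale as the 0.213, 0.338 and 0.558 reported above, and all function names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def _bag_of_words(sentence: str) -> Counter:
    return Counter(re.findall(r"[a-z']+", sentence.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def semantic_cohesion(response: str) -> float:
    """Mean similarity of each sentence to the next one (0 = unrelated)."""
    sentences: List[str] = [s for s in re.split(r"[.!?]+", response) if s.strip()]
    if len(sentences) < 2:
        return 0.0  # convention chosen here: no adjacent pair, no cohesion score
    vectors = [_bag_of_words(s) for s in sentences]
    sims = [_cosine(vectors[i], vectors[i + 1]) for i in range(len(vectors) - 1)]
    return sum(sims) / len(sims)


linked = "Reviews show the real room. The room photos in reviews are honest."
listed = "Price. Location. Wifi."
print(semantic_cohesion(linked) > semantic_cohesion(listed))  # True
```

A response whose sentences build on each other shares vocabulary between neighbours, while a list of isolated attributes scores zero.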

Argumentative depth – the ability to explain claims, articulate cause-and-effect relationships and provide examples – also improved significantly. AI responses scored 2.40 compared to 1.86 in traditional surveys, a 29% increase. Voice responses reached 3.10, nearly 67% higher than traditional mode, indicating that spoken responses more frequently included justifications, comparisons and evaluation criteria.

Thematic Stability

A critical concern when introducing new methodology is whether it introduces thematic bias. The researchers coded all open-ended responses using the same thematic framework to assess whether AI moderation altered the nature of opinions expressed.

The analysis revealed no new or unique themes in either group. The same set of themes appeared across traditional and AI modes with comparable relative importance. Minor variations in ranking reflected differences in emphasis rather than structural changes in content.

This finding addresses a fundamental methodological concern: AI moderation does not distort what people think; it changes how fully they express it. The thematic structure remains stable whilst the articulation becomes richer, more contextualised and more analytically useful.
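One way to check that theme rankings are comparable across modes is Spearman's rank correlation between the theme frequencies in each group. The article does not say this statistic was used; the sketch below is an illustration with hypothetical function names and toy counts. A theme present in only one group gets a count of zero in the other, so a mode-specific theme shows up as a rank disagreement.

```python
from typing import Dict, List


def _average_ranks(values: List[float]) -> List[float]:
    """Rank from largest (1) to smallest; ties share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def spearman(a: List[float], b: List[float]) -> float:
    """Spearman's rho as the Pearson correlation of average ranks."""
    ra, rb = _average_ranks(a), _average_ranks(b)
    n = len(ra)
    ma, mb = sum(ra) / n, sum(rb) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(ra, rb))
    var_a = sum((x - ma) ** 2 for x in ra)
    var_b = sum((y - mb) ** 2 for y in rb)
    if var_a == 0 or var_b == 0:
        raise ValueError("all counts identical in one group; rho is undefined")
    return cov / (var_a * var_b) ** 0.5


def theme_rank_agreement(traditional: Dict[str, int], ai_mode: Dict[str, int]) -> float:
    """Compare theme frequencies; a theme missing from one group counts as 0 there."""
    themes = sorted(set(traditional) | set(ai_mode))
    return spearman(
        [traditional.get(t, 0) for t in themes],
        [ai_mode.get(t, 0) for t in themes],
    )


# Toy counts for illustration only, not the study's data.
traditional = {"price": 120, "cleanliness": 95, "location": 80, "staff": 40}
ai_mode = {"price": 130, "location": 110, "cleanliness": 100, "staff": 45}
print(round(theme_rank_agreement(traditional, ai_mode), 2))  # 0.8
```

In the toy data two mid-ranked themes swap places, which lowers rho from 1.0 to 0.8: a difference in emphasis rather than a new theme.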

Participant Experience

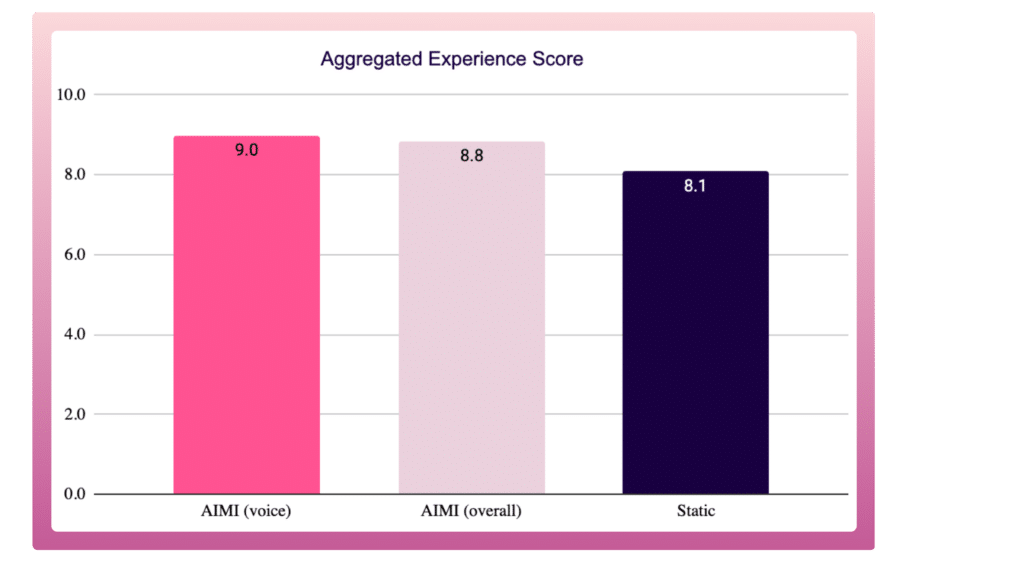

The experiential dimension of AI-moderated surveys differed markedly from traditional questionnaires. Participants rated their overall experience significantly higher for AI (8.84 versus 8.09 on a 10-point scale), with 85.6% assigning ratings of 8 or higher compared to 69.1% in traditional surveys.

Specific experiential measures revealed consistent advantages. AI participants felt more at ease (89.8% versus 80.4%), found the experience more enjoyable (89.8% versus 70.0%), felt more listened to (86.6% versus 67.5%), and found it easier to answer questions (92.6% versus 75.7%).

Notably, negative indicators decreased: only 16.9% of AI participants reported not enjoying the questionnaire compared to 19.9% in traditional mode, and only 25.6% felt unable to express themselves well compared to 32.6%.
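Whether a gap such as 89.8% versus 80.4% ("felt at ease") is larger than sampling noise can be sketched with a Wald confidence interval for the difference of two proportions. This is an illustration under stated assumptions, not the study's own test: it treats both groups as unweighted simple random samples and assumes every participant (500 and 503, from the sample sizes above) answered the item. Weighting generally widens intervals, so the real margin would likely be somewhat larger.

```python
import math
from typing import Tuple


def diff_ci(p1: float, n1: int, p2: float, n2: int, z: float = 1.96) -> Tuple[float, float]:
    """Wald interval for p1 - p2 from two independent samples (z=1.96 gives ~95%)."""
    diff = p1 - p2
    se = math.sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    return diff - z * se, diff + z * se


# "Felt at ease": AI mode vs traditional, under the assumptions above
low, high = diff_ci(0.898, 500, 0.804, 503)
print(f"({low:.3f}, {high:.3f})")  # (0.050, 0.138)
```

An interval that excludes zero is consistent with the gap not being due to chance alone, under these simplifying assumptions.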

The voice modality showed further improvements, with 92.9% feeling at ease and only 12.3% reporting they did not enjoy the experience. These experiential improvements have methodological implications: more comfortable participants are likely to provide more thoughtful, accurate responses and participate more willingly in future research.

Qualitative Patterns

Qualitative analysis of response content confirmed that AI prompting encouraged explicit reasoning. Traditional survey responses frequently listed attributes without explanation, whilst AI responses – particularly voice responses – included justifications, examples, conditions and edge cases.

Voice responses displayed a narrative quality absent from typed responses. Participants recounted specific episodes, mentioned exceptions and provided contextual details that enriched understanding. This narrative richness brings quantitative surveys closer to the discourse quality of qualitative interviews whilst maintaining standardisation and scale.

Signals of cognitive fatigue – vague responses, “I don’t know” statements and disconnected words – appeared more frequently in traditional surveys. AI interaction mitigated these signals, encouraging participants to reformulate initially vague answers into clearer, more detailed expressions.
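Flagging such signals before analysis is often done with simple rules. The sketch below is a hypothetical rule set, not the coding scheme used in the study: `fatigue_flags`, the phrase list and the thresholds are all assumptions chosen for illustration. It marks very short answers, "don't know" style answers and bare word lists.

```python
import re
from typing import List

# Hypothetical phrase list; a real study would apply its own coding rules.
DONT_KNOW_PHRASES = ("don't know", "dont know", "not sure", "no idea")


def fatigue_flags(response: str, min_words: int = 3) -> List[str]:
    """Return rule-based quality flags for one open-ended answer."""
    text = response.strip().lower().replace("\u2019", "'")  # normalise curly apostrophes
    words = re.findall(r"[a-z']+", text)
    flags: List[str] = []
    if len(words) < min_words:
        flags.append("too_short")
    if any(phrase in text for phrase in DONT_KNOW_PHRASES):
        flags.append("dont_know")
    # Several comma-separated single words, e.g. "price, location, photos"
    parts = [p.strip() for p in re.split(r"[,;/]", text) if p.strip()]
    if len(parts) >= 2 and all(len(p.split()) == 1 for p in parts):
        flags.append("word_list")
    return flags


for answer in ["I don’t know", "price, location, photos",
               "Reviews help me avoid noisy hotels near the station."]:
    print(answer, "->", fatigue_flags(answer))
# I don’t know -> ['dont_know']
# price, location, photos -> ['word_list']
# Reviews help me avoid noisy hotels near the station. -> []
```

In a conversational setting the same flags could trigger a follow-up prompt instead of simply marking the answer for cleaning.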

Implications for Research Practice

The evidence demonstrates that conversational AI introduces a structural change in how open-ended survey data is collected and expressed. The benefits extend beyond increased word counts to encompass richer vocabulary, greater conceptual diversity, higher semantic cohesion, deeper argumentation and improved participant experience.

For researchers designing studies, conversational AI functions most effectively as a tool for enhancing open-ended data without altering thematic content. The voice capability offers particular promise for reducing cognitive friction and enabling more natural expression, though text-based AI interaction also delivers substantial improvements over static surveys.

The reduction in low-quality responses and cognitive fatigue signals lowers the cleaning burden during analysis. The expansion of thematic coverage and argumentative depth means open-ended data carries greater analytical weight and provides richer material for coding and interpretation.

As voice models and adaptive interviewing capabilities continue to evolve, the gap between AI-moderated and human-moderated qualitative research is likely to narrow further. Researchers who build experience with conversational AI now position themselves to leverage these advances as the technology matures.

For researchers evaluating conversational AI platforms, the evidence-based insights from Glaut’s Research on Research programme provide a foundation for informed decision-making about when and how to integrate these tools into quantitative research workflows – check out the programme for yourself.