One of the key attributes that defines a lean insight team is its ability to iterate at speed.

Build-measure-learn, then return to the start again:

- Build a hypothesis (or concept or campaign or mockup or product or …)

- Measure user reactions (ask or observe … engage them consciously or unknowingly)

- Learn from what they reveal - and go back to step 1.

This is the scientific method.

Nothing new here.

But what is new is the reduced friction and increased speed that today’s insight platforms bring.

They can run continuously, enabling little-and-often feedback cycles - rather than big projects with long timelines.

They reduce the effort and overhead requirements in going back to users.

And they expand the pool of questions that are worth getting customer or user input on: the incremental cost of asking is now much lower.

In this post, I’ll highlight some of these iterative insight platforms - with examples of how brands are using them - in four main categories:

- Optimisation and A/B testing platforms

- Communities and customer advisory boards

- On-going CX feedback programmes

- On-going usability feedback tools.

1 Optimisation and A/B testing

Optimisation (or, more precisely, Conversion Rate Optimisation or CRO) is all about increasing the chances that a user will do something on your site or in your app: subscribe, make a purchase, sign up to your blog etc.

Optimisation relies on gathering user feedback, analysing it and then making a change to improve conversion.

One of the most common approaches is A/B and multivariate testing: showing slightly different versions of an interface (booking form, landing page, shopping basket etc) to different groups of users (usually segmented randomly) to find the strongest performer.
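As a rough illustration of the random segmentation described above, here is a minimal Python sketch of one common way to split users between versions: hashing a user ID so that each visitor lands in the same group on every visit. The function name, the experiment label and the user ID format are all hypothetical; none of the platforms mentioned in this post are known to work exactly this way.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a user to one variant of an experiment.

    Hypothetical helper: hashing experiment + user_id gives a stable,
    roughly uniform split without storing any assignment table.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Interpret the hash as a large integer and bucket it by variant count.
    return variants[int(digest, 16) % len(variants)]


# Example usage with made-up identifiers.
group = assign_variant("user-12345", "signup-button-test")
```

Including the experiment name in the hash means the same user can fall into different groups across different tests, which keeps experiments from being correlated with each other.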

At any one time, high traffic platforms and sites will be showing multiple test versions to different sub-sets of users. If you’re reading this on LinkedIn, chances are some of you are unwittingly in a test group right now, and seeing a slightly tweaked version compared to the rest of us.

The beauty of testing in this way is that experiments can run constantly and be replicated with minor changes, and benchmarks can be built up over time. Performance inches forward with each iteration.
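Before declaring a winner, teams usually check that the difference between versions is bigger than chance alone would produce. One standard way to do this is a two-proportion z-test, sketched below in plain Python. The visitor and conversion counts are invented for illustration and are not taken from any case study in this post; real testing platforms may use different statistical methods.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates.

    conv_* are conversion counts and n_* are visitor counts for the
    control (a) and the test variant (b).
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Illustrative numbers only: 2.0% vs 2.5% conversion on 10,000 visitors each.
z, p = two_proportion_z_test(conv_a=200, n_a=10_000, conv_b=250, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (below 0.05 is a common convention) suggests the uplift is unlikely to be noise. With low traffic, even a large apparent uplift may not pass this check, which is one reason tests are left to run for days or weeks.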

Even seemingly tiny changes can have a dramatic impact on conversion.

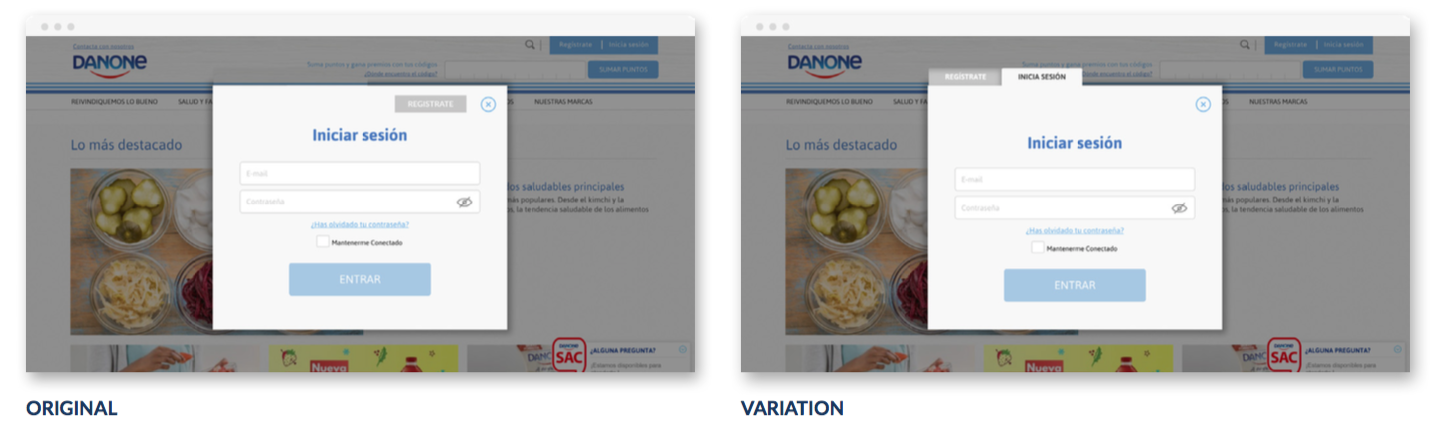

Food company Danone uses the AB Tasty platform for iterative insights on its website. In one case, it suspected its site users were confusing ‘login’ and ‘register’ buttons, and that people were being lost at point of sign-up. It tested a slight variant in design (on the right), and saw logins increase by 78% and registrations increase by 29%.

You can read more AB Tasty case studies here.

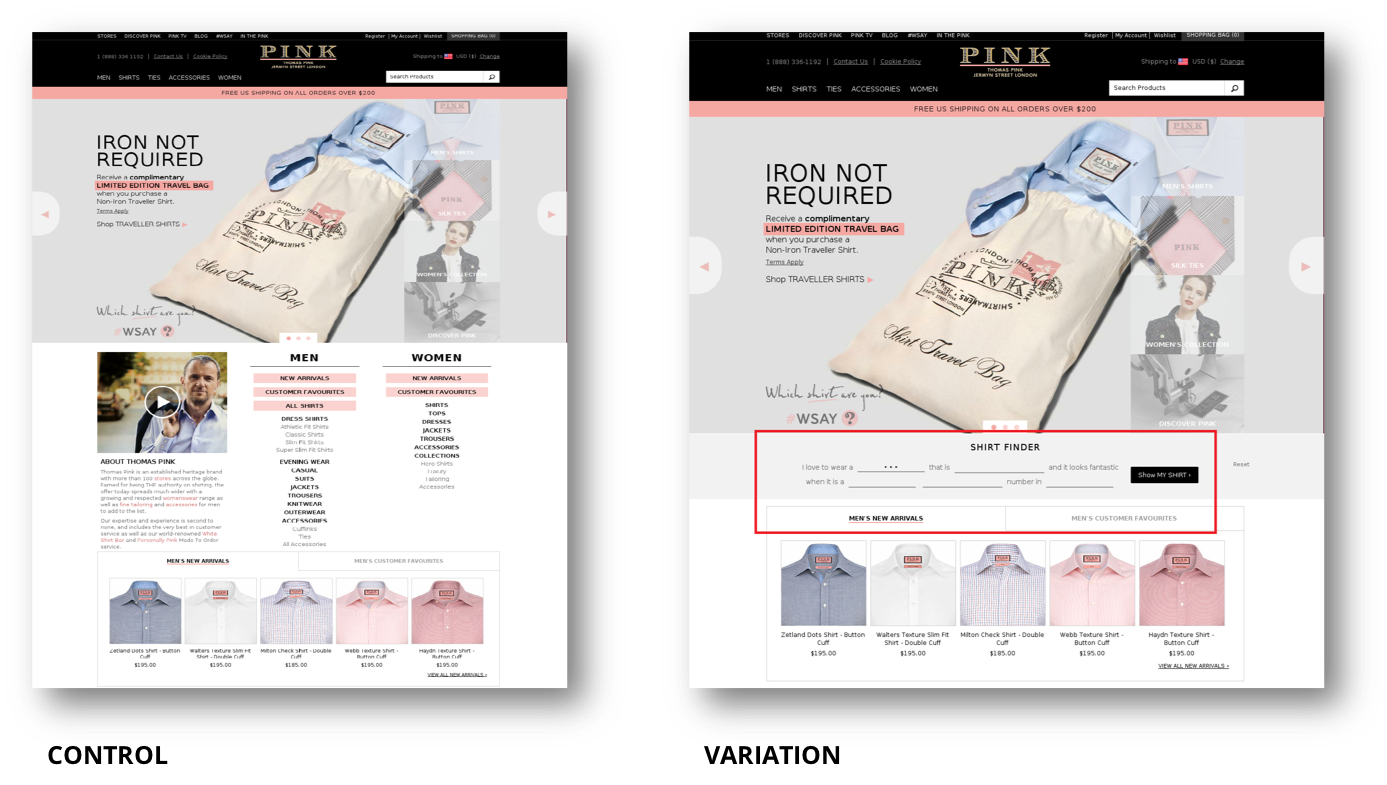

British shirtmaker Thomas Pink used the VWO platform to test a major change on its website homepage. Using prior insights from analytics, surveys and in-store observations, the team hypothesised that online conversions would increase if they could replicate a particular aspect of the in-store experience: asking sales staff for shirts to suit a specific occasion.

A ‘shirtfinder’ widget was given prominent placing on the homepage, with this variant seen by more than 130,000 visitors over 30 days. The result was a 12% increase in order volume and value. You can see the control and test versions here:

You can read more VWO case studies here.

If you want to learn more about optimisation, check out Qualaroo’s guide here.

If you want more on multivariate and A/B testing, check out this resource from Optimizely.

2 Communities and Customer Advisory Boards

Communities run on a wide range of tools - only some of which are designed specifically for research or feedback purposes. All these tools enable some form of iterative insight.

Customer engagement platforms

These are tools such as Lithium, Jive and GetSatisfaction - mainly used for customer forums or support hubs. UK mobile operator Giffgaff, for example, has built its ‘run by members’ approach on the Lithium platform and used it to co-create new services with users.

These communities can generate high volumes of customer comments - spontaneous and unstructured data that can be mined for insight with NLP / text analytics tools like Chattermill, Sentisum or Gavagai.

CRM platforms with survey tools

Feedback software can be used in conjunction with customer databases - either with custom integrations (e.g. SurveyMonkey with Hubspot, or Qualtrics with Salesforce); dedicated products (Zoho Surveys with the Zoho CRM app for SMEs); or through API connector tools like Zapier (e.g. SurveyGizmo with Pipedrive, or Typeform with Insightly).

For any organisation with good access to its own customer data, there are dozens of creative ways to build a feedback community using low cost components.

Surveys can be targeted at specific segments; customers can be progressively profiled over time; surveys can be shorter if you already have lots of the answers in the database.

Thread, the ‘personal stylist’ e-commerce fashion app, uses Typeform in this way; and Audible, the Amazon audiobooks app, has recruited a sub-set of users to its Sounding Board panel, which runs on Qualtrics.

Qualitative communities

Known sometimes as MROCs (Market Research Online Communities), these are more often shorter term (a few days to a few months) - although some do run continuously. They are used for co-creation, getting contextual understanding over time, or bringing niche groups of participants together.

Qualitative community software includes tools such as Together, Incling and Recollective.

Virgin Hyperloop One used the Liveminds platform for a 4-day community with 20 US participants to explore attitudes to the future of transportation.

“[We could] listen to real people in a private, personal and one-on-one way,” says Leslie Horwitz, Strategic Communications Manager for Virgin Hyperloop One.

“This encouraged candour and avoided groupthink … it was powerful for us to hear stories from across the country.”

Communities can also be used for non-commercial innovation insights. UNICEF used the Together platform for iterative co-creation of new initiatives with donors.

“[We are planning] to go back to our original community with worked up ideas to test,” says Anand Modha, Innovation Manager at UNICEF UK.

“It shows both the engagement [of] participants with the platform … and UNICEF’s confidence in them to provide the information we need to further develop our products.”

Hybrid insight communities

Also referred to as custom panels or private panels, these tools usually run continuously, and share four main features: a light CRM for user management; a front-end CMS (Content Management System) for member engagement; survey tools; and some qualitative research functions - either basic discussion forums or more creative tools for marking up stimulus, whiteboarding ideas and moderating live chat sessions.

Vendors include Crowdtech, Vision Critical, Toluna Quick Communities, Fuel Cycle, and FlexMR.

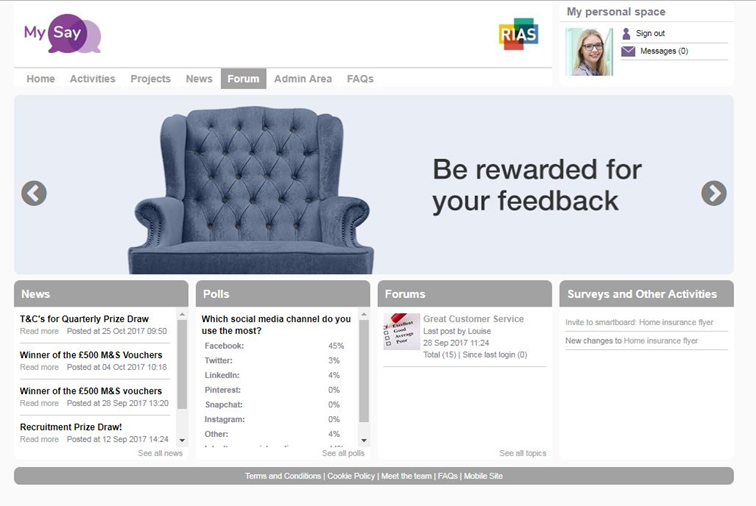

UK-based insurer Ageas built its MySay insight community using the FlexMR platform. Nearly 2,000 customers of its Rias direct insurance brand take part in frequent polls, surveys, discussions, usability tests and co-creation activities.

Community members help with early stage proposition development, testing and refinement; opportunities can then be sized using larger, representative market surveys.

The community is also used for qualitative user testing. Participants complete a series of online tasks on the Rias website and take part in simultaneous live chat sessions with members of the Ageas research team.

“The fact that we can keep dipping in and out is incredibly valuable,” says Dr Parves Khan, UK Research & Insights Lead at Ageas.

“The community makes it so much easier to involve customers in our decisions. Before, we’d have to request customer data, work with an external agency and wait weeks for results. Now I can answer questions the next day - and if something isn’t clear, it’s easy to go back to the community and ask them to clarify.”

3 Customer Experience (CX) Feedback Programmes

Customer satisfaction tracking used to be done through long surveys demanding exhaustive recall of experiences that customers couldn’t remember in language they didn’t understand.

Thankfully nobody does that any more*.

Today we have many better options for gathering feedback, using tools with iterative insight embedded in their design: feedback from customers is tracked; problems and issues are responded to directly through closed-loop tools; and targets are set to improve KPIs through these continuous cycles.

The innovators in this space have been the multichannel enterprise feedback platforms (Medallia, InMoment, MaritzCX and others). These tools offer industrial-strength solutions for complex use cases.

Mercedes-Benz, for example, uses Medallia to survey half a million sales and service customers each year across nearly 400 US dealerships. Over 5,000 MBUSA users have access to the system, which is positioned as an operational improvement capability - not just a set of feedback surveys.

Until recently, these approaches would have been out of reach to all but the largest enterprise customers.

But there are now much leaner approaches that rely on micro surveys (typically NPS) distributed through digital channels: platforms such as Wootric, AskNicely and promoter.io. Surveys are embedded in experience touchpoints; results are visualised in online reports; and even startups can deploy these tools for just a few dollars a month.
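For readers unfamiliar with how NPS is calculated, the standard definition is simple: respondents rate their likelihood to recommend on a 0-10 scale, and the score is the percentage of promoters (9-10) minus the percentage of detractors (0-6). The Python sketch below shows that arithmetic; the function name and the sample responses are made up for illustration, and the platforms named above will have their own implementations.

```python
def net_promoter_score(scores):
    """Compute NPS from a list of 0-10 'likelihood to recommend' ratings.

    Promoters score 9-10, detractors 0-6; passives (7-8) count only
    towards the total number of responses.
    """
    if not scores:
        raise ValueError("at least one response is required")
    if any(s < 0 or s > 10 for s in scores):
        raise ValueError("ratings must be between 0 and 10")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


# Made-up responses: 5 promoters, 2 passives and 3 detractors.
responses = [10, 9, 8, 7, 6, 0, 10, 9, 5, 10]
nps = net_promoter_score(responses)
```

The result ranges from -100 (everyone is a detractor) to +100 (everyone is a promoter), which is why it suits the little-and-often tracking described here: a single question yields a number that can be compared from one cycle to the next.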

Social management app Hootsuite, for example, uses Wootric to track NPS. Feedback requests go via email, in-app or on-page; and customer comments are used to help drive the product roadmap.

Rackspace, one of the world’s largest cloud hosting businesses, uses promoter.io for its NPS surveys. Customer service teams can pick up and respond to any issues captured in the survey in near real time, and dashboards display metrics and comments as soon as they are captured.

4 User Experience Feedback Programmes

Where does customer experience end and user experience start? What’s the difference? It’s kind of an obscure branch of marketing disputation.

(And it’s made trickier by UX tool vendors who say they sell CX solutions … so their investors place them in a bigger market space).

But I’m using the Interaction Design Foundation’s distinction outlined here - UX is about a product or service-specific experience; CX is the cumulative experience of a company or brand.

UX is a component of CX.

I know not everyone will agree.

So how do UX feedback programmes deliver iterative insight?

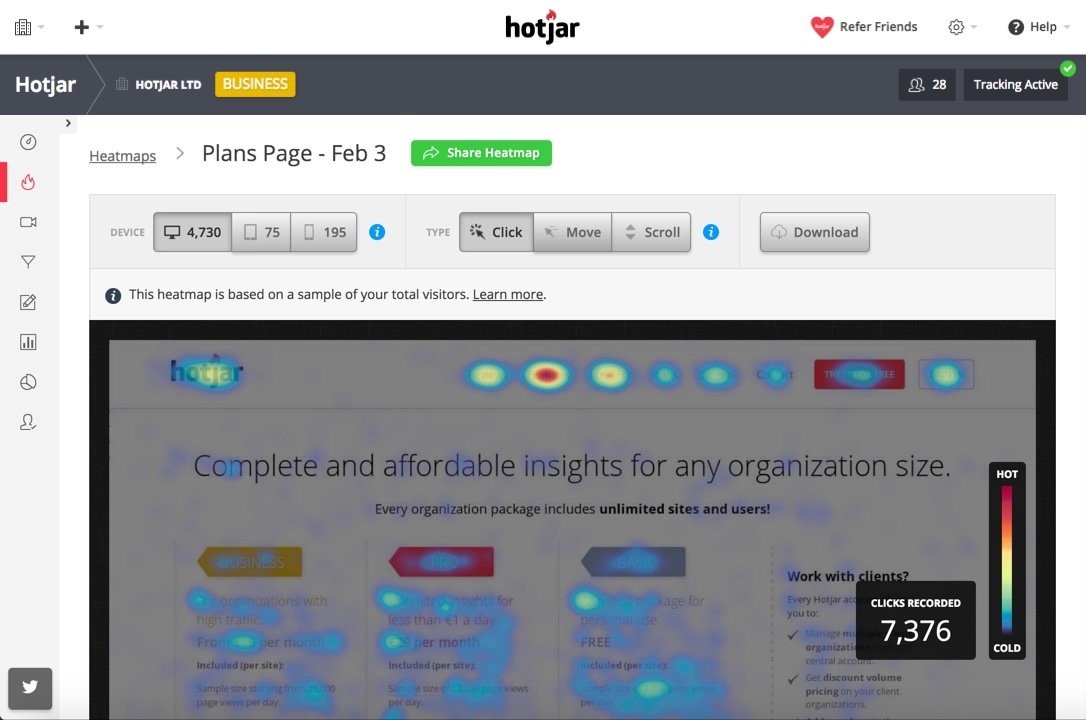

Session Replay and Heatmapping

Session replay tools are used to record how visitors use a website: they create videos of individual sessions, with each click, scroll and text entry captured. The aim is to find weak spots in the experience and signpost things that need improving; product development and support teams can search for session videos of specific users or segments that meet given criteria.

(BTW if you think this sounds a bit creepy, you’re not alone - see this article for more on the privacy implications).

In addition to videos, the tools generate aggregated outputs including heatmaps and click trails. When product teams make changes to a site or app, they can quickly see videos of user interactions; they can then see the cumulative effect in heatmaps.

Leading vendors include Hotjar, Fullstory and Crazy Egg.

Reed.co.uk, an online recruitment site, is a Hotjar user. One of the key advantages it sees in session recordings is that they reduce the need for face-to-face and remote user testing; this doesn’t just reduce costs - it also eliminates the interviewer and observer-expectancy effects.

In-page or In-app User Feedback

These need little explanation: side-tabs, popups, sliders, overlays, interstitials and whatever other not-at-all-annoying feedback requests get inserted into websites and apps.

Usabilla, Mopinion and Qualaroo are leaders in the field, although there are many more specialists and generalists offering similar tools.

Remote User Testing

Sometimes, session replays just won’t cut it.

Maybe the beta is too flaky to be A/B tested in the wild; or more likely, there’s a need for more context to a user’s behaviour.

User testing tools support remote usability interviews. These can be moderated or unmoderated; specific tasks can be given to participants (try to book a flight, find a specific product etc); session videos can be recorded along with audio of users narrating their experience; face-to-face video of users can also be captured in moderated sessions.

UserTesting, Usabilityhub and Loop11 all offer these features; participants can be intercepted on-site using tools like ethn.io or recruited from user testing panels like Testbirds or Testing Time.

Final Thoughts

To run a lean model, platforms that enable iterative insight are essential.

They make for much less friction in capturing, analysing and acting on consumer feedback:

- less friction on users and participants (easier to give feedback, behavioural data captured invisibly);

- less friction on insight and analytics teams (shorter timelines, lower incremental costs);

- less friction on agencies (smaller teams, lower overheads).

But these platforms also sit at the heart of some big disruptions in research and analytics:

- insight team structures are changing - read how Twitter, O2, eBay and others see those changes here

- the client-side skillset and toolbox is evolving - more on that here

- agencies need to re-think their business models.