OK, I’ll be honest: neuromarketing is a bit of a bucket category in the directory.

It includes lots of different techniques that are variously grouped under behavioural psychology, System 1 and emotionAI tools. And other fancy words.

All these things stem from a recognition that asking questions (in online surveys, interviews or focus groups) doesn’t always get to the truth.

We need tools that get behind our rationalised, socially conditioned responses. To find out what we really feel and mean. Our emotions. Our biases. Our reflexive responses.

Because everybody lies, apparently.

Maybe I’m lying right now and I just haven’t realised it*.

How could you tell?

For starters, you could try one of these methods.

Implicit Response Tests

Time how quickly I process a question to reveal my hidden biases.

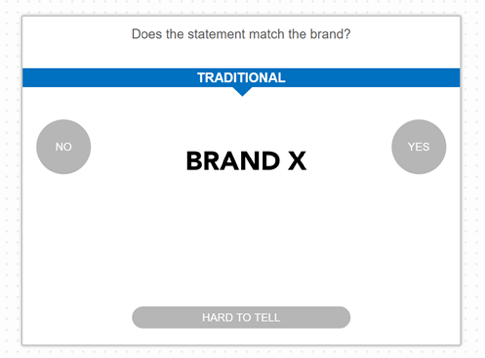

There are variations on this, but the most common online approach involves dragging or swiping a brand or a word to a positive or negative association on the screen.

For example …

- Show me a picture of a Marlboro pack

- Ask me to slide it to either ‘cool’ on one side of the screen or ‘stupid’ on the other

- Time how long it takes me to do that

- Benchmark that against the rest of my answers or the rest of the sample

- Then tell me I still have a lingering desire to smoke after 15 years of (mostly) not being stupid.
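If you're curious what the "time it and benchmark it" steps might look like in practice, here is a minimal Python sketch. It is not how any of the vendors below actually score their tests. The item names, timings and the choice of a simple z-score against my own average are all assumptions made up for illustration.

```python
from statistics import mean, stdev


def latency_z_scores(latencies_ms):
    """Standardise each response time against the respondent's own average.

    A strongly negative score means the item was sorted unusually quickly
    relative to this respondent's other answers.
    """
    values = list(latencies_ms.values())
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        # Every answer took the same time, so nothing stands out.
        return {item: 0.0 for item in latencies_ms}
    return {item: (t - mu) / sigma for item, t in latencies_ms.items()}


# Hypothetical timings (in milliseconds) for one respondent sliding
# each item to 'cool'. All names and numbers are invented.
timings = {
    "brand_a": 620,
    "brand_b": 700,
    "brand_c": 680,
    "marlboro": 410,
    "brand_d": 740,
}

scores = latency_z_scores(timings)
fastest = min(scores, key=scores.get)
print(fastest, round(scores[fastest], 2))
```

Benchmarking against the rest of the sample rather than my own answers would work the same way, just with everyone's timings for that item as the baseline.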

iCode (reviewed in full by Andy Buckley of Join the Dots here), CloudArmy Neuro and Sentient Prime have implicit test software that can be incorporated into online surveys.

Crowd Emotion has an implicit reaction test that combines with eye tracking and facial coding to build a composite picture of media engagement.

Mindsight Direct from isobar includes implicit tests in its model for eliciting unconscious emotional drivers.

Protobrand and Neuro-Flash also include implicit question types in their range of fully managed projects.

Voice Analytics

Record me talking and then analyse my intonation and breathing patterns to measure stress levels.

Beyond Verbal analyses raw vocal intonations to determine emotional valence, arousal and temper.

Invibe combines acoustic analytics (classifying valence and arousal in a speaker’s tone of voice) with language analytics (using NLP to decode the sentiment of transcribed conversations).

Cogito also has similar capabilities, but is used in call centres for sales and support teams rather than marketing projects.

Eyes and Face Analytics

Track my eye movements and facial expressions to reveal what I really feel.

Eye tracking with glasses has been around for a while, used extensively by retailers and brands that sell through grocery. Tobii is the main player for this type of hardware.

Hi-res under-screen cameras such as the Gazepoint system are also used for UX design and testing media.

Webcams are seen by many as good enough for most use cases, although they are still limited when high levels of precision are needed.

Sticky, Eyes Decide, Emotion Research Lab, Crowd Emotion and AffectLab all offer webcam solutions for remote eye tracking.

And if you want expertise, solution providers like Lumen, Eye Square, Eye Tracker and Eye Zag can design and manage projects as well as supply the software and kit.

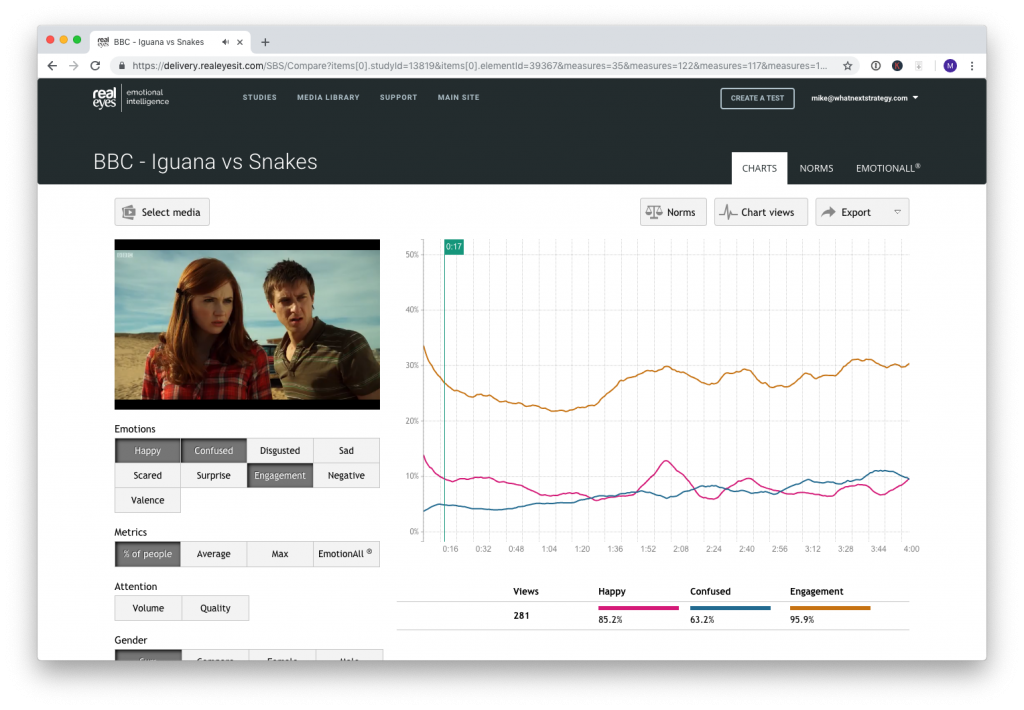

Facial coding has been widely adopted for testing video over the last few years.

A camera (usually a webcam nowadays) captures changes in ‘micro-expressions’ on a viewer’s face as they watch an ad, a trailer or even longer-form video.

Using machine learning, these are mapped to a model that classifies the expression into one of a handful of ‘core’ emotions (anger, disgust, fear, happiness, sadness, surprise … or some variant of these).

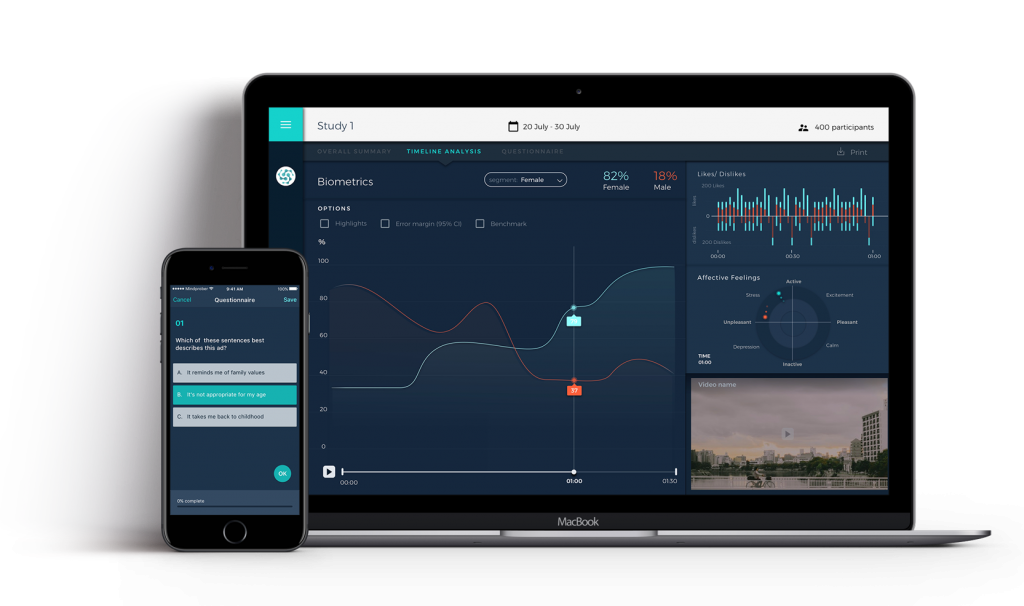

The outputs are usually a series of trace lines that show peaks or troughs of emotions mapped against time-stamps in the video.
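To make the trace-line idea a bit more concrete, here is a rough Python sketch of picking out the peaks in one emotion trace. The data format (a list of time-stamp and score pairs), the 0.5 threshold and the sample numbers are all assumptions for illustration, not the output format of any tool mentioned in this post.

```python
def find_peaks(trace, threshold=0.5):
    """Return the (seconds, score) points that are local maxima.

    A point counts as a peak if its score is at or above `threshold`,
    higher than the previous point and at least as high as the next one.
    The first and last points are never reported as peaks.
    """
    peaks = []
    for i in range(1, len(trace) - 1):
        t, score = trace[i]
        prev_score = trace[i - 1][1]
        next_score = trace[i + 1][1]
        if score >= threshold and score > prev_score and score >= next_score:
            peaks.append((t, score))
    return peaks


# Hypothetical 'happiness' trace: (seconds into the video, score 0 to 1).
happiness = [
    (0.0, 0.10),
    (1.0, 0.20),
    (2.0, 0.70),
    (3.0, 0.40),
    (4.0, 0.30),
    (5.0, 0.85),
    (6.0, 0.60),
]

peaks = find_peaks(happiness)
print(peaks)
```

Each peak's time-stamp can then be matched back to whatever was on screen at that moment.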

Emotion Research Lab, Crowd Emotion, Realeyes, AffectLab and Affectiva all have facial coding solutions.

Heads up though – just to put the cat amongst the pigeons – if you’re using these models, it’s worth digging a little to understand exactly how they work. Don’t just rely on facial coding as the single source of truth – triangulate its results with other data sources.

Paul Ekman’s theory of ‘universal’ facial expressions – which underpins some facial coding models – has had a good kicking. Lisa Feldman Barrett’s brilliant book How Emotions are Made does a comprehensive take-down of it. You can see some of the arguments in this article if you don’t fancy the book.

Finger and Mouse Analytics

OK, this isn’t what most people call it.

But technically, you could figure out my emotional state from the way in which I use my smartphone or my mouse.

Chromo uses machine learning to measure user activation and valence levels from their touch gestures on smartphone screens. Emaww does a similar job.

Essentially, this means assuming that I’m cross if I stab the screen of my phone or shake it violently. Or I’m more relaxed if I gently slide my finger around.

And you can also try to infer users’ emotional states from their mouse movements.

One of CoolTool’s features is mouse tracking, which helps to build a picture of behaviour on a given site.

Decibel Insight can also do this. Their report on ‘digital body language’ takes data from 2 billion user sessions to build an emotional model of online behaviour.

Biometrics

Or you could strap a device on me and measure my pulse rate, brain activity or electrodermal activity.

These methods used to be the preserve of wealthy marketing teams or university departments but are now much more accessible.

EEG measurements typically include cognitive state (asleep > drowsy > low engagement > high engagement) and workload (boredom > optimal > information overload).

GSR (Galvanic Skin Response) measures changes in sweat gland activity that are telltale signs of emotional arousal.

If you want to run your own studies, you can buy kit like the Emotiv EEG headsets or Shimmer GSR sensors for a few hundred dollars. Or you could work with Bitbrain’s mobile neuromarketing labs.

Recent startup Mindprober has taken this online with its panel of biometric sensor-equipped respondents and self-service tools for project management and analysis. You can use it for testing ads, video or even live broadcasts.

Or you could work with a specialist agency like Neuro Insight.

Tying it all together

But now you have all these different streams of data about me, how do you make sense of them?

AffectLab and Crowd Emotion integrate different feeds from eye tracking, facial coding, implicit tests and brain activity measurement.

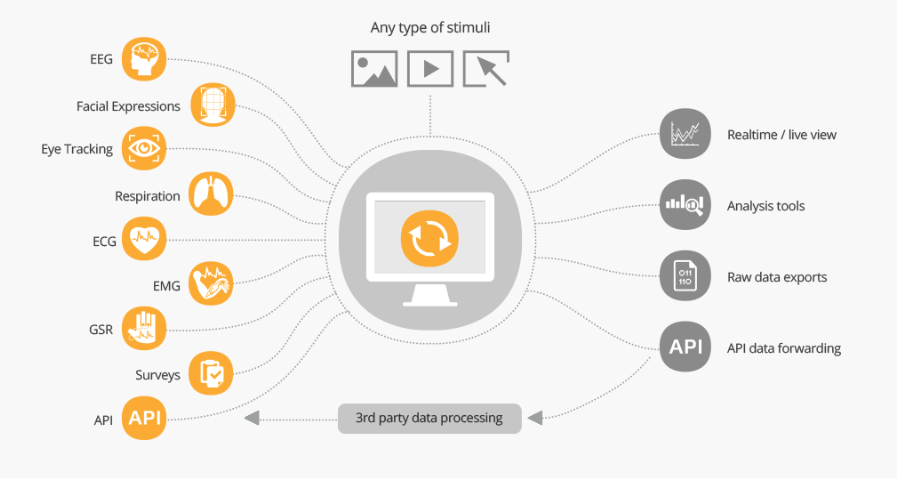

CoolTool’s Neurolab can combine inputs from a wide range of lab-based and online tests.

Sensum integrates face, voice and biometric reads with contextual data (like ambient conditions).

And iMotions is a kind of universal combinator for all sorts of different neuro inputs.

This post has really just scratched the surface of what’s available in the world of neuromarketing / implicit / emotion research tools.

Clever startups are launching new ways to collect and analyse this data all the time; and features that were once highly specialist or very costly are now much more accessible.

But like any new field, a little knowledge can be a bit dangerous. It’s important to read around this topic before trusting entirely in a new method or piece of software. For all the smartness of these tools, the human brain is still an enormous mystery.

If you want some more depth on the methods and tools discussed here, iMotions has detailed guides to facial coding, eye tracking and EEG. Neuro Insight has some great articles and scientific research. And there are some good case studies over on the Sentient Decision Science blog.

*I’m not. At least not consciously.

But Everybody Lies by Seth Stephens-Davidowitz is worth a read. It doesn’t take long, and has good stories about how data science and behavioural analytics get to insights that surveys won’t reveal. Apparently there are spikes in Google searches for racist jokes on Martin Luther King Day. Districts with these spikes were more likely to vote Trump in 2016.

Who would have guessed?
