In this Introduction to Text Analytics for Research, you’ll find some background on Natural Language Processing and then:

- 4 key concepts and some terminology you can drop into discussions with text analytics suppliers … so they don’t take you for a total noob

- 10 different uses for text analytics in research, social media listening and customer experience management

- 4 ways to get it done.

Natural Language Processing

NLP – Natural Language Processing – is an umbrella term for dozens of techniques and algorithms that have their origins in the 1950s.

The real-world impact of these tools has grown exponentially over the last 20 years as NLP has benefited from massive advances in computer science, processing power and linguistics – as well as the explosion in available data for machine learning and model-building.

NLP powers hundreds of apps and services we take for granted: speech recognition, machine translation, search engine crawling and indexing, those ‘did you mean …’ corrections to our fat-fingered Googling, even auto-writing summaries of stock performance or research findings.

And the branch of NLP known as Natural Language Understanding powers the software we use in text analytics applications.

These applications process unstructured text to pick out keywords, identify entities, define concepts and understand sentiment.

Sounds simple.

Of course it’s not.

If you are buying or using tools for text analytics, it’s worth having a little more depth on how some of the magic happens – so you can constructively question what you’re being pitched, how the software works and whether it’s right for the job in hand.

4 key concepts

1. Syntactic & semantic analysis

Syntax is the way in which language is arranged. It defines the rules and structure, and syntactic analysis (also called parsing) identifies which elements in a sentence are nouns, verbs, adjectives etc.

Semantic analysis is all about the meaning that is conveyed.

I trained as a linguist, and I know how hard it is for humans to interpret ambiguity, irony, double meaning, context-dependency, cultural references, regional idioms … and that’s just in your native tongue.

Consider headlines like “Miners refuse to work after death” or “Fraudster gets nine months in violin case”. Training computers to work out the meaning behind complex sentences like these is a huge task.

2. Models and training

Machine learning (a feature of most NLP tools) really boils down to using data to answer questions.

This involves taking existing data (a training set) to create some rules (the model) that can be applied to new data to classify it or make predictions.

For example: you work for a wine brand. You want to know how often people post about wine in social media.

Your first step would be to work with a training set – a large enough extract of the total data – to label all the different ways in which people talk about drinking wine (grape varieties, slang terms, brands etc).

This is generally a manual task – painstakingly done by humans. Many suppliers outsource it to gig workers on Amazon’s Mechanical Turk, Freelancer.com or PeoplePerHour.

If you work in a niche category, existing models that have been built this way may not work for you – so budget for creating your own custom model.
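To make the training-set idea concrete, here is a minimal sketch: a tiny naive Bayes classifier that learns from a handful of hand-labelled posts and then labels new posts as wine-related or not. The posts, the labels and the choice of naive Bayes are all assumptions for illustration; real tools train on far larger sets and often use more sophisticated models.

```python
import math
from collections import Counter

# Hypothetical hand-labelled training set: (post, label) pairs.
TRAINING_SET = [
    ("cracked open a lovely bottle of malbec tonight", "wine"),
    ("nothing beats a cold glass of prosecco", "wine"),
    ("vino and pizza friday", "wine"),
    ("that sauvignon blanc was crisp and fruity", "wine"),
    ("stuck in traffic again this morning", "other"),
    ("new running shoes arrived today", "other"),
    ("pizza friday with the team", "other"),
    ("cold morning walk with the dog", "other"),
]

def tokenize(text):
    return text.lower().split()

def train(examples):
    """Build the 'model': word counts per label, label counts and the vocabulary."""
    word_counts, label_counts, vocab = {}, Counter(), set()
    for text, label in examples:
        label_counts[label] += 1
        counts = word_counts.setdefault(label, Counter())
        for word in tokenize(text):
            counts[word] += 1
            vocab.add(word)
    return word_counts, label_counts, vocab

def classify(text, model):
    """Return the label with the highest naive Bayes log-probability."""
    word_counts, label_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, float("-inf")
    for label, doc_count in label_counts.items():
        counts = word_counts[label]
        total_words = sum(counts.values())
        score = math.log(doc_count / total_docs)  # prior: how common the label is
        for word in tokenize(text):
            # Add-one smoothing so an unseen word doesn't zero out the whole score
            score += math.log((counts[word] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

model = train(TRAINING_SET)
print(classify("a glass of malbec with dinner", model))
```

With eight examples this is a toy, but the shape is the same at scale: labelled data in, a model out, predictions on new text.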

3. Keyword and entity extraction

Keywords, terms and topics are the low-hanging fruit of text analytics: only very basic software is needed to compile a list of individual items and track changes in volume over time.
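Counting keywords really is this simple. The sketch below assumes a hypothetical export of (month, post) pairs and a hand-picked keyword list, and tallies mentions per month.

```python
from collections import Counter, defaultdict

# Hypothetical sample of (month, post) pairs, e.g. exported from a listening tool.
posts = [
    ("2024-01", "Loving this rioja, best wine of the winter"),
    ("2024-01", "Dry January means no wine for me"),
    ("2024-02", "Valentine's dinner with a bottle of wine and some fizz"),
    ("2024-02", "Wine tasting at the vineyard this weekend"),
    ("2024-02", "Is rosé a wine or a lifestyle?"),
]

# Hypothetical keyword list for a wine brand.
KEYWORDS = {"wine", "rioja", "fizz"}

def monthly_keyword_counts(posts, keywords):
    """Count keyword occurrences per month, ignoring case and trailing punctuation."""
    counts = defaultdict(Counter)
    for month, text in posts:
        for word in text.lower().split():
            word = word.strip(".,!?'\"")
            if word in keywords:
                counts[month][word] += 1
    return counts

counts = monthly_keyword_counts(posts, KEYWORDS)
for month in sorted(counts):
    print(month, dict(counts[month]))
```

The second January post mentions wine while saying the writer isn't drinking it, which is exactly where simple counting stops and semantic analysis has to take over.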

Entity extraction (AKA Named-Entity Recognition, or NER) is a little more complex. This classifies named entities into pre-defined categories such as the names of persons, companies, places etc.

This is particularly important if, say, you want to analyse blog posts or social media updates to work out how, when and where people may be consuming your brand.

NER systems annotate blocks of text, tagging each entity they find with its category: a person, a company, a place and so on.

There are several standard frameworks in place; again, you may need something customised if your need is quite niche.
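As a rough illustration of what those annotations look like, here is a sketch of the simplest possible approach: a gazetteer, i.e. a dictionary lookup of known names. This is a swapped-in technique, not how standard NER frameworks work; they use trained statistical or neural models that can recognise names they have never seen. The entity list and the sentence are hypothetical.

```python
import re

# Hypothetical gazetteer: hand-built list of known names and their categories.
GAZETTEER = {
    "Jane Smith": "PERSON",
    "Leeds United": "ORGANISATION",
    "Amazon": "ORGANISATION",
    "Leeds": "PLACE",
}

def tag_entities(text, gazetteer):
    """Find gazetteer names in text; return (entity, category, start, end) tuples."""
    # Try longer names first so "Leeds United" wins over "Leeds" at the same position.
    names = sorted(gazetteer, key=len, reverse=True)
    pattern = re.compile(r"\b(?:" + "|".join(re.escape(n) for n in names) + r")\b")
    return [(m.group(0), gazetteer[m.group(0)], m.start(), m.end())
            for m in pattern.finditer(text)]

def annotate(text, gazetteer):
    """Rewrite text with each entity wrapped as [name|CATEGORY]."""
    parts, last = [], 0
    for entity, category, start, end in tag_entities(text, gazetteer):
        parts.append(text[last:start])
        parts.append(f"[{entity}|{category}]")
        last = end
    parts.append(text[last:])
    return "".join(parts)

sentence = "Jane Smith bought Leeds United tickets on Amazon before travelling to Leeds."
print(annotate(sentence, GAZETTEER))
```

Note how the same word, "Leeds", gets two different categories depending on context; a lookup list only copes because "Leeds United" is listed separately, which is one reason real systems learn from labelled examples instead.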

4. Sentiment analysis

Sentiment analysis tries to work out the opinions expressed in text, including:

- Polarity: whether the opinion is positive or negative. This might be a simple good/bad or red/amber/green polarity, or it can be fine-grained on a 5- or 7-point scale.

- Subject: what is being talked about.

- Opinion holder: the person or entity with the opinion.
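Here is a minimal lexicon-based sketch of polarity scoring on a 5-point scale. The word scores, the negation rule and the scale mapping are all hypothetical. It only handles polarity (not subject or opinion holder), and it will happily misread irony.

```python
# Hypothetical mini-lexicon: word -> score from -2 (very negative) to +2 (very positive).
LEXICON = {"love": 2, "great": 1, "good": 1, "bad": -1, "ugly": -2, "awful": -2, "hate": -2}
NEGATORS = {"not", "never", "no"}
LABELS = ["very negative", "negative", "neutral", "positive", "very positive"]

def polarity_score(text):
    """Sum word scores; a negator flips the sign of the word immediately after it."""
    score, negate = 0, False
    for raw in text.lower().split():
        word = raw.strip(".,!?")
        if word in NEGATORS:
            negate = True
            continue
        value = LEXICON.get(word, 0)
        score += -value if negate else value
        negate = False
    return score

def five_point_label(text):
    """Clamp the score to -2..+2 and map it onto a 5-point scale."""
    score = max(-2, min(2, polarity_score(text)))
    return LABELS[score + 2]

for post in ["I love the new crest!", "The new crest is not good.", "Not bad at all", "Ugly. Just ugly."]:
    print(post, "->", five_point_label(post))
```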

Sometimes sentiment analysis is straightforward. Often it’s not.

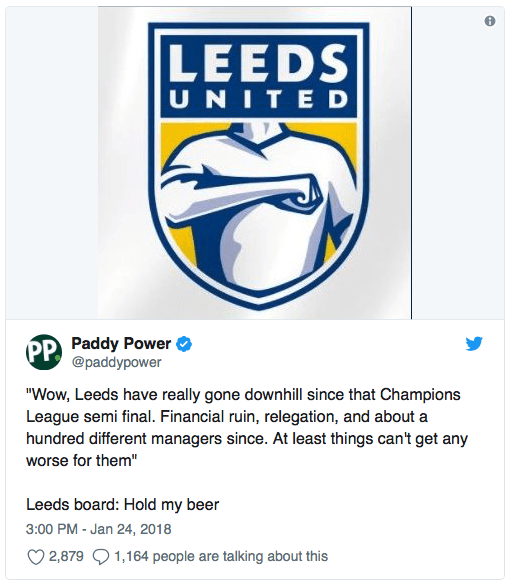

Pity the poor Leeds United social media team whose listening tools needed to understand whether people viewed their new club crest positively or negatively. How do you train a computer to read the sentiment in this tweet?

Fortunately there were enough unambiguous, context-free, non-ironic messages to help them come to a swift conclusion.

5. Bonus terms

These are not really important unless you want to get right into the weeds – but if you hear them, you don’t need to feel bamboozled.

- Stopword removal: taking out the bits of a sentence that don’t add much value (‘the’, ‘and’ etc)

- Stemming and lemmatisation: taking different forms of words back to their root (getting to ‘walk’ from ‘walking’, ‘walked’ etc)

- POS tagging and chunking: this is about recognising (tagging) Parts of Speech (POS) as nouns, verbs etc; and joining (chunking) terms that go together in a specific context (eg "Latin" and "America" become the single term "Latin America").
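Here is a rough sketch of stopword removal, a deliberately crude stemmer and phrase-list chunking. The stopword list, suffix rules and phrase list are hypothetical; libraries such as NLTK and spaCy ship proper stopword lists, stemmers, lemmatisers and POS taggers. POS tagging is left out because it needs a trained model, and a lemmatiser would go further than this stemmer, using a dictionary to map irregular forms like "ran" back to "run".

```python
# Hypothetical stopword list and multi-word phrases, for illustration only.
STOPWORDS = {"the", "and", "a", "an", "of", "to", "in", "we", "i", "was", "were", "is"}
PHRASES = {("latin", "america")}

def naive_stem(word):
    """Crude suffix stripping; real stemmers such as Porter's apply many more rules."""
    for suffix in ("ing", "ed", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

def chunk(tokens, phrases):
    """Join adjacent tokens that form a known phrase into a single token."""
    out, i = [], 0
    while i < len(tokens):
        if i + 1 < len(tokens) and (tokens[i], tokens[i + 1]) in phrases:
            out.append(tokens[i] + " " + tokens[i + 1])
            i += 2
        else:
            out.append(tokens[i])
            i += 1
    return out

def preprocess(text):
    tokens = [t.strip(".,!?") for t in text.lower().split()]
    tokens = chunk(tokens, PHRASES)                      # chunking
    tokens = [t for t in tokens if t not in STOPWORDS]   # stopword removal
    # Stem single words only; leave chunked phrases intact
    return [t if " " in t else naive_stem(t) for t in tokens]

print(preprocess("We walked and walked in Latin America, walking the trails."))
```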

There are doubtless dozens of others I’ve missed. People talk about phonology, morphology and other aspects of linguistic analysis … but honestly, I don’t know what they mean.

10 things you can do with Text Analytics in Research

OK, enough with the theory. Let’s get practical. A brief, unordered list of handy things that text analytics can do for researchers, product managers and CX people.

1. Understand the Customer Experience better

Many organisations now have streams of both asked-for and unsolicited feedback: online reviews, customer service tickets, product feedback, experience tracking surveys, transcribed audio of sales calls … it goes on and on.

All this unstructured data can now be processed, tagged, understood and tracked with NLP-based tools.

2. Prioritise product feature requests

Most product teams receive continuous feedback and suggestions for improvements or new features.

Tools exist to capture some of this in a structured way – but much of it comes in as unstructured ad hoc user input, complaints or reviews.

Text analytics can be used to make sense of all of this and pick out the most-requested features.

3. Turbocharge qualitative research

Generally, machine learning needs large volumes of data, so there’s not much benefit in applying text analytics to a single focus group transcript or a handful of interviews.

But ‘qualitative’ research can sometimes generate scale: many product and UX teams carry out continuous streams of user interviews. If these are transcribed, they can be analysed.

Online discussions in forums or communities can also generate large volumes of unstructured data; some startups are even building tools around the automated analysis of ‘qualitative at scale’.

4. Squeeze more value out of your video assets

Recordings of usability interviews, narrated user tests, focus groups and other one-to-one interviews can now be captured in video research platforms and automatically transcribed. This makes their content both searchable and analysable.

5. Measure brand sentiment

This is one of the more common applications for text analytics – social media listening. Increasingly, it is being applied to verbatim responses in brand tracking surveys.

6. Understand emotions

Apparently 97% of what I’ve written here is emotionally driven.

Something like that.

Or maybe I’ve read the Behavioural Economics stuff wrong.

Anyway, there are both generic NLP and specific EmotionAI tools for decoding the emotional content of language. And these are not just being used for research – they are screening job candidates, insurance claimants and mental health patients.

7. Create automatic summaries of content

This one is more of a stretch: going beyond just analysis and using NLP tools to generate content.

Platforms like Narrative Science and Wordsmith are used to read stock market indicators and create investment summary notes that are largely indistinguishable from content written by people.

Zappi does the same to produce summaries of its automated survey projects.
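For a flavour of how generated text can work, here is a very simple template-based sketch that turns a day's price movement into a sentence. The company names and prices are hypothetical, and the sketch makes no claim about how Narrative Science, Wordsmith or Zappi produce their summaries, which handle far more data and far more varied phrasing.

```python
def price_summary(name, open_price, close_price):
    """Turn one day's price movement into a sentence using fixed templates."""
    if close_price == open_price:
        return f"{name} was unchanged at {close_price:.2f}."
    change = (close_price - open_price) / open_price * 100
    direction = "rose" if change > 0 else "fell"
    return f"{name} {direction} {abs(change):.1f}% to close at {close_price:.2f}."

# Hypothetical companies and (open, close) prices, for illustration only.
prices = {"Acme Corp": (50.0, 52.0), "Globex": (80.0, 72.0), "Initech": (10.0, 10.0)}
for name, (open_price, close_price) in prices.items():
    print(price_summary(name, open_price, close_price))
```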

8. Monitor trends

This will be much more familiar: social media listening tools scan billions of items of user-generated content to work out if things are on the up or on the wane.

It might be pretty simple stuff – tracking mentions of a keyword – or much more sophisticated, looking for patterns of meaning in conversations around a topic.
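Moving from counts to trends means comparing periods. This sketch flags terms whose mention volume moved by at least a threshold between two periods; the counts, the 50% threshold and the minimum-volume cut-off are hypothetical choices you would tune.

```python
def flag_trends(previous, current, threshold=0.5, min_mentions=10):
    """Label terms 'rising' or 'falling' when volume changes by at least `threshold` (0.5 = 50%)."""
    flags = {}
    for term in set(previous) | set(current):
        before, after = previous.get(term, 0), current.get(term, 0)
        if max(before, after) < min_mentions:
            continue  # too little volume to call it a trend
        change = (after - before) / max(before, 1)  # avoid dividing by zero for new terms
        if change >= threshold:
            flags[term] = "rising"
        elif change <= -threshold:
            flags[term] = "falling"
    return flags

# Hypothetical mention counts for two consecutive months.
last_month = {"orange wine": 12, "rosé": 80, "prosecco": 40, "natural wine": 3}
this_month = {"orange wine": 30, "rosé": 35, "prosecco": 42, "natural wine": 6}
print(flag_trends(last_month, this_month))
```

The minimum-volume cut-off matters: "natural wine" doubled, but from 3 to 6 mentions, which is noise rather than a trend.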

9. Analyse competitors

This is another use for social listening tools. But text analytics can also be used for much more focused competitive intelligence.

Competitors who post news updates, content marketing and placed media provide a rich source of data. All this material can be captured using RSS feeds or scraped with simple tools. It can then be analysed for specific keyword triggers, or monitored for changes over time.
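A minimal sketch of keyword-trigger monitoring: it scans new items (say, headlines already pulled from a competitor's RSS feed) for a hypothetical list of trigger phrases and skips anything it has already seen. Fetching and parsing the feeds is left out.

```python
import re

# Hypothetical trigger phrases worth flagging in competitor content.
TRIGGERS = re.compile(
    r"\b(?:price (?:cut|increase)|launch(?:es|ed)?|acquir(?:es|ed|ing)|partnership)\b",
    re.IGNORECASE,
)

def new_alerts(items, seen_ids):
    """Return (item_id, sorted trigger phrases) for unseen items; marks every item as seen."""
    alerts = []
    for item_id, title in items:
        if item_id in seen_ids:
            continue
        seen_ids.add(item_id)
        hits = sorted({m.group(0).lower() for m in TRIGGERS.finditer(title)})
        if hits:
            alerts.append((item_id, hits))
    return alerts

# Hypothetical headlines, as if already pulled from a competitor's feed.
items = [
    ("a1", "Rival Co launches budget range"),
    ("a2", "Rival Co announces price cut on premium line"),
    ("a3", "Meet our new head chef"),
    ("a1", "Rival Co launches budget range"),  # duplicate from a later poll
]
seen = set()
alerts = new_alerts(items, seen)
print(alerts)
```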

10. Code verbatim responses from surveys

This one is a feature of some of the other applications here – but also worth drawing out separately, given its potential (still) to save time and cost in survey research over manual coding.
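At its simplest, automated coding means matching each verbatim against a code frame. The sketch below uses a hypothetical keyword-based code frame, allows several codes per response and tallies the results; many commercial tools use trained models rather than hand-written keyword lists like this one.

```python
from collections import Counter

# Hypothetical code frame: each code is triggered by any of its keywords.
CODE_FRAME = {
    "Delivery": {"delivery", "late", "courier"},
    "Price/value": {"price", "expensive", "cheap", "value"},
    "Staff": {"staff", "rude", "helpful", "friendly"},
}

def code_verbatim(response, frame):
    """Assign every code whose keywords appear in the response; 'Other' if none do."""
    words = {w.strip(".,!?") for w in response.lower().split()}
    codes = sorted(code for code, keywords in frame.items() if words & keywords)
    return codes or ["Other"]

# Hypothetical open-ended survey responses.
responses = [
    "Too expensive and the delivery was late.",
    "Staff were really helpful!",
    "No complaints.",
    "Great value, friendly staff.",
]
tally = Counter(code for r in responses for code in code_verbatim(r, CODE_FRAME))
print(tally.most_common())
```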

4 ways to do text analytics

OK, now you have a cheat sheet of jargon and some cool applications.

How do you actually go about doing text analytics for research?

1. Use a service bureau

Simple. Outsource it to a specialist. Many of the firms who once did survey coding are now getting into the text analytics game.

2. Use an Excel plugin

A few software vendors have created versions of their tools that can run inside Excel. These are pretty handy if you don't want to commit to a full software subscription.

3. Subscribe to a SaaS product

The directory has over 30 providers of SaaS text analytics tools, and I know I’ve missed quite a few. These vary in focus and complexity, but most include:

- automated input of regular text feeds (from tracking surveys, review platforms etc)

- custom model creation (or tweaking of existing models)

- self-service dashboards

- integrations with other software tools and data sources – either directly or through Zapier.

4. Connect via API

If you know what you’re doing or have access to a coder, many SaaS tools allow you to plumb directly into them.

In fact, several software tools have done just that themselves: the big cloud providers (Amazon AWS, Google Cloud and Microsoft Azure Cognitive Services) all have suites of NLP tools that can be built into third-party applications.
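Plumbing in usually means sending your text to the provider as an HTTP request with a JSON body and reading back JSON results. The endpoint, payload shape and bearer-token header below are hypothetical placeholders, not any specific provider's API; check your vendor's API reference for the real ones. The sketch builds the request without sending it.

```python
import json
import urllib.request

# Hypothetical endpoint and key: substitute the values from your provider's API docs.
API_URL = "https://api.example.com/v1/sentiment"
API_KEY = "YOUR_API_KEY"

def build_request(texts):
    """Build (but don't send) a JSON POST request asking for sentiment on a batch of texts."""
    payload = {"documents": [{"id": str(i), "text": t} for i, t in enumerate(texts)]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {API_KEY}"},
        method="POST",
    )

req = build_request(["Loved the new crest", "Not a fan of the new crest"])
# To actually send it (needs a real endpoint and key):
# with urllib.request.urlopen(req) as resp:
#     result = json.load(resp)
```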

I hope this has been useful.

In a follow-up post, I’ll break out some examples of text analytics SaaS tools in different categories: CX analytics, EmotionAI, verbatim coding, social listening, multi (native) language capabilities and free/cheap tools.

Thanks for reading.

Further reading

Many of the SaaS platform blogs have excellent educational content. Check out the Thematic blog, MeaningCloud's resources and white papers, or MonkeyLearn's comprehensive guides to Sentiment Analysis and intro to NLP.

The Towards Data Science magazine on Medium has some excellent intros to NLP, as well as other great content on machine learning and AI.

There is also a great introduction to NLP on Builtin.com.

Here’s an example of the cloud APIs available for building your own solutions – from Microsoft Azure’s Cognitive Services.