Rise of the ‘attention robots’

Of late, some of our clients have asked us why we don’t use AI-enabled ‘attention visualisation’ systems. These systems claim to be able to predict what humans will look at within a visual scene based on what they call ‘bottom up’ processing. If you know that, say, that people tend to look at faces or areas of high colour contrast, then perhaps you can use AI to recognise when these elements appear on a website or in a film and then assume that people are going to look there.

Why bother going to the trouble of getting real people to do real eye tracking if the machine can predict where they are going to look anyway?

At Lumen, we think you need humans to help you understand human attention.

We don’t use these systems because:

- They can’t tell you anything you don’t already know

- They can never tell you anything about meaning

Understanding uncertainty and managing meaning are what we do at Lumen, and, as a result, we know that we need other humans to help us do both.

Tell me something I don’t already know

Psychologists have known for years that, all things being equal, people tend to look at faces, or edges or areas of high contrast and so on. When we collect data for our clients at Lumen we frequently see the same thing ourselves: there’s usually a big blob of attention on people’s faces, and ads with more colour contrast do seem to get more attention than ads without it. Attention, so some extent, is predictable.

The attention prediction algorithms take this insight further. They start by identifying visual features that they believe attract attention (known as ‘bottom up’ characteristics) and then associate a probability of each of them actually getting looked at. They then train an AI model to look for these characteristics in an image or video and apply the attention probabilities accordingly. The results are then validated using real eye tracking with real people, with the hope that, all things being equal, people actually look where the algorithm predicts they will look.

The promise of the technology is alluring. If the results look good, you have an instant heatmap of likely attention, without having to go to the trouble of recruiting real people.

Different scientists enumerate, order and weight the different ‘bottom up’ factors in different ways: some think there are ten basic features, others twelve, others think there are hundreds; some people think that faces are most important, others movement; some think that we consider faces first, then movement, while others think the decision tree works the other way round.

There is a big repository of competing algorithms collected by MIT/Tubingen which you can check out.

But there’s a problem.

The all these algorithms are only as good as assumptions that go into them. If you think that faces are most important, then you’ll give that most weight in your model, and the algorithm will give you lots of lovely heatmaps of attention on faces. If you are a corners kind of gal, then that’s what will turn up in you results. If movement is what you think drives attention, then that will be what your model will say drives attention. The model can’t tell you anything that you don’t tell it in the first place.

And if that’s the case, why bother with the model? Why not just have a factsheet that tells people ‘people probably look at faces first’ and be done with it? That would be even quicker – and certainly a lot cheaper – than going through the rigamarole of putting your ad through an ‘attention robot’.

Tell me something that means something

But there’s another problem. These algorithms can’t compute meaning. And meaning is really rather important. Sure, people look at faces. But some faces are much more attractive or interesting than other faces. More importantly, some faces are far more meaningful than other faces. You can stick a picture of me and a picture of Beyoncé in the paper, and we both know already which one will get more attention – but an algorithm doesn’t.

This problem of meaning is even more apparent when it comes to textual content. The meaning of the words makes a big difference as to whether or not they will be read.

To test this out, we did a side by side comparison of how people actually read a newspaper and how one of these Ai systems predicts they will look at. We asked 150 people to read a pdf copy of The Metro on their home computers, and then fed the same edition of the paper into one of the better-known attention prediction algorithms.

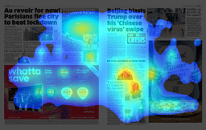

Now, to be fair, sometimes the AI came close to estimating what actually happens in the real world. In this example (Fig.1), there is a semblance of connection between prediction and reality. The AI obviously weights images as more important than text, and thinks areas of high colour contrast are attention grabbers, and so places ‘heat’ over these features – and it has a point. People do look there.

But it doesn’t understand that people also read the newspaper for the news, and so want to read the articles. Or that people read from left to right and top to bottom, and so misses out the attention that goes to the article on the lefthand side. Finally, it doesn’t understand that humans love pizza, and especially love deals on pizzas, which is the subject of the ad in the bottom right.

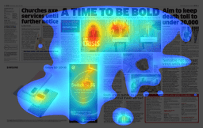

This problem of meaning can be seen to more dramatic effect in a different double page spread. We did this research at the height of the coronavirus outbreak, when people were scouring the newspapers for vital information on how to respond to the threat posed by the disease.

Usually, newspaper editors lay out their pages with the most important sections at the top of the page and in the biggest type – the sort of tying that you might think, one day, an algorithm could include in its estimate.

However, in this edition of The Metro, the subs put an explainer box, packed with useful information, at the bottom right-hand corner of the page.

How is a poor algorithm expected to know that sometimes humans find some blocks of text more interesting than other blocks of text? It thinks that people look at people, which is true (see the blobs of attention on Rishi Sunak and Boris Johnson – though what poor Patrick Vallance has done to earn the enmity of the algorithm I don’t know).

It also thinks that edges are important. It doesn’t realise that vital information about a health crisis trumps all the other factors, and so has entirely missed the attention that went to the explainer box.

Attention is not just driven by these ‘bottom up’ features. It is also – and primarily – driven by ‘top down’ factors. All things being equal, I am sure that we do notice faces and edges and blocks of colour in predictable ways. But all things are never equal.

We live within a sea of meaning – from which we can never escape. Everything is meaningful, at some level. Which means that trying to prise the meaning-free ‘bottom up’ factors from the meaning-rich ‘top down’ factors is meaningless.

‘Seeing’ and ‘seeing as’

To understand why ‘top down’ processes are more important than ‘bottom up’ processes, it is important to remember what vision is for.

We use our eyes to help us achieve ends in the world – avoid dangers and obstacles, attain goals and desires. When we look at the world, we impose a meaningful order on it – some things are significant, and something less so. The significance can change depending on our motivation. You barely register those car keys on the table until it’s time to leave the house to pick up the kids from school. Is that a Stop sign in the distance? It doesn’t matter until, well, it matters.

We ignore almost everything almost all the time, focussing our limited foveal resources on what matters to us right now.

But when we do find something significant or meaningful, we then invest our attention accordingly. That thing that could be a Stop sign in the distance? It’s so far away that it doesn’t matter right now. But we know that it could be important quite soon, so we invest some attention to investigate our hypothesis. Closer inspection confirms our suspicions and so we put our foot on the brake.

Two important things just happened in this little Stop sign story.

Firstly, we didn’t just ‘see’ the colours and edges of the sign – the ‘bottom up’ factors. We saw this collection of phenomena ‘as’ a Stop sign – something with significance and meaning.

Secondly, there was a process of perception. We thought that the sign might have meaning – and closer inspection showed that it did!

The great visual psychologist RL Gregory famously said that perceptions are hypotheses. We’re constantly guessing at what the meaning of different elements of visual scene might be, and then investing attention to confirm our hunches. But the hunch – the meaning – comes first. Sometimes we think of visual attention as if it was i-spy; but perhaps it’s more like Guess Who?

And this is why the ‘bottom up’ drivers so beloved of attention AI predictions cannot be separated from the ‘top down’ drivers. They are not independent. They are not even separate drivers. They are just mini-‘top down’ drivers.

Why are faces important? Because we are a social animal and lots of good things and some bad things can come from the other animals like us.

Why do edges get attention? Because they connote depth or difference which might be useful for us in the real world.

Why does movement matter? Because we want to be able to understand what is going on around us.

You need humans to understand human attention

Automated attention algorithms assume that human attention is built from the bottom up. This ‘computational’ theory of attention is attractive but flawed. The mind is not like a computer, frantically calculating the relative importance of different stimuli in realtime. Instead, the mind is a meaning machine, guessing and testing and learning and adapting to help it achieve our ends. Trying to abstract the meaning out of the process misses the point. It’s the meaning that drives the process.

This means that these ‘attention robots’ are useless. They can’t tell us anything that we didn’t already know. And they can’t tell us anything about meaning – which is the only thing that matters.

You need humans to understand human attention.

Find out more about Lumen or get in touch here.