Ten Trends in Research & Insights for 2024

By Insight Platforms

These ten trends in research & insights were originally shared in this webinar about predictions for 2024.

Watch it here for more context, examples and videos:

Bonus: to help entertain you as you wade through the trends in this article, we’ve added a brief musical accompaniment to each one. Just play the video before you read each section.

And we’re very, very sorry for including Limahl.

The Ten Trends

1. Video Kills the Survey Star

One of our industry’s leading innovators, Jamin Brazil, said in a recent podcast interview that “nobody speaks in Likert Scales”.

What does he mean?

He is describing how the inherent inflexibility of the survey format has forced quantitative research to adopt strange frameworks that don’t mirror the real world.

Surveys were designed as a mechanism for capturing feedback at scale, so they replaced open discussion with closed questions to make data processing easier and more cost-effective – initially with punch cards and latterly in other machine-readable formats.

But today, we can gather feedback from thousands of people quickly and cheaply using video-at-scale. Ubiquitous smartphone ownership and a cultural shift – especially among younger generations, who are much more willing to record and share videos – make this easier to achieve. But the biggest leap has come from advances in AI, which enable accurate transcription, translation, and summarisation to surface insights from video.

So, video is no longer just a mechanism for gathering qualitative feedback from small samples. It can now be applied at massive scale and it’s relatively low-cost.

You can see some good examples of this in practice in these videos: Microsoft’s ChatGPT Video case study with Voxpopme, and Knit’s case study with Mars.

2. Computer Love

When a human conducts a research interview with another human, a degree of empathy is generated between the two individuals. Done well, the two people connect in a way that allows the participant to feel comfortable and reveal more about their thoughts, feelings, and behaviours.

This is very hard to replicate if you try to swap the human interviewer for a computer.

But it is getting easier and the rate of progress is startling.

In the Spike Jonze film Her, Joaquin Phoenix falls in love with his smartphone’s AI, voiced by Scarlett Johansson. There are countless other film and TV examples of people forming these empathetic bonds with software and robots. It’s known as the Eliza Effect: the way that we anthropomorphise technology by attributing human-like characteristics to artificial systems.

With the emergence of Large Language Models like ChatGPT, we are starting to see how AI tools can build authenticity, credibility and even emotional connection with people in ways that weren’t previously achievable.

For example, a recent article in Frontiers in Psychology documents an experiment in which 400 participants were asked to rate the answers to 50 well-known social dilemmas. Some were from professional advice columnists and some were generated by ChatGPT.

The AI-generated advice was better received than that given by the professional advice columnists.

“ChatGPT’s advice was perceived as more balanced, complete, empathetic, helpful and better than the advice provided by professional advice columnists.”

Other products built with LLMs are forming strong emotional connections with their users. Replika is an AI companion that people can use to configure virtual friends to talk to and develop relationships with over time.

It hit the headlines in 2023 when some users claimed they were falling in love with, and dating, their AI girlfriends.

Character.ai is another AI companion platform that is incredibly popular: millions of daily users interact with the personas they create.

So how is this playing out in the world of research?

Historically, primary market and user research has involved a trade-off between scale (ie large surveys using quantitative data collection) and empathy (ie interviews, observations and group discussions at small scale). It’s hard to achieve empathy with large-scale surveys, and it’s not practical to do thousands of in-depth interviews.

But AI tools are now being applied to conversational surveys and automated interview moderation, and are starting to bridge this gap between scale and empathy. Tools such as inca, outset, tellet and others can engage participants in two-way dialogue, respond to the answers they give, and generate much greater empathy than a conventional survey.

With the rapid advances in text-to-video AI, we can expect to see these autonomous moderation tools blending with credible avatars for much more empathetic research at scale.

3. Everything Connected

Let’s return briefly to our face-to-face qualitative interview between a researcher and a participant.

The insights generated in these encounters don’t just come from the spoken words – the researcher will observe, analyse and interpret many other signals. Body language. Deep breaths and pauses. Eye movements. Environmental or contextual cues (in-home, in a workplace). Interactions with a physical or digital product.

In a world of AI-moderated interviews that rely solely on the content of language, none of these other sources of insight would be captured. But that is about to change.

With the GPT-4V release (V is for Vision), OpenAI brought multimodal AI into ChatGPT. Now, you can upload images into your chat prompt and ask the model to analyse the content of those pictures.

I did just this with a photo of the inside of a kitchen store cupboard. The AI accurately described the products it could detect. I then asked it to classify the contents into a table with columns for product type, packaging type and brand – which it did instantly.

In the short term, this type of multimodal AI will make it far easier to analyse images from respondents, conduct diary studies, run mobile ethnographies, and much more.

Longer term, we will see AI tools that combine inputs from language, computer vision and other types of sensors to develop holistic insights – much closer to the skillset of our qualitative interviewer.

Indeemo, for example, can already use Optical Character Recognition (OCR) to recognise any text that appears in video, and match it with text transcribed from the audio narration.
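To make the idea of matching on-screen text with spoken narration more concrete, here is a minimal Python sketch. It is not Indeemo’s implementation: it assumes OCR and speech-to-text have already run upstream, and the `OcrHit`, `TranscriptSegment` and `align` names, the one-second tolerance and the sample data are all hypothetical. It simply pairs each piece of detected text with whatever the participant was saying at roughly the same moment.

```python
from dataclasses import dataclass


@dataclass
class OcrHit:
    """Text detected on screen by OCR (hypothetical structure)."""
    time_s: float  # seconds from the start of the video
    text: str


@dataclass
class TranscriptSegment:
    """A span of transcribed audio narration (hypothetical structure)."""
    start_s: float
    end_s: float
    text: str


def align(ocr_hits, segments, tolerance_s=1.0):
    """Pair each OCR hit with narration spoken at roughly the same time.

    A hit matches a segment when its timestamp falls inside the segment,
    widened by `tolerance_s` seconds on either side.
    """
    pairs = []
    for hit in ocr_hits:
        for seg in segments:
            if seg.start_s - tolerance_s <= hit.time_s <= seg.end_s + tolerance_s:
                pairs.append((hit.text, seg.text))
    return pairs


# Hypothetical example: a participant filming their store cupboard.
ocr_hits = [OcrHit(3.2, "Brand X Oat Milk"), OcrHit(12.0, "Best before 05/24")]
segments = [
    TranscriptSegment(0.0, 5.0, "This is the one I always buy"),
    TranscriptSegment(10.5, 14.0, "I always check the date"),
]
for on_screen, spoken in align(ocr_hits, segments):
    print(f"{on_screen!r} <-> {spoken!r}")
```

The nested loop is fine for a short diary clip; a real pipeline would also need to de-duplicate the same text detected across many frames and tolerate OCR misreads.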

Emotion AI tools such as Element Human and Affectiva can read participants’ facial expressions as signals of engagement and combine these with the content of spoken feedback or survey responses.

4. Fake Plastic People

Synthetic data has become one of the most widely discussed trends in research & insights.

It is used extensively in industries from robotics to healthcare and finance. It is used to test systems, avoid using sensitive personal data in regulated industries, and generate digital twins for scenario modelling.

In research, artificially generated and augmented data can now mimic data collected through surveys or qualitative research. Large Language Models simulate human responses in market research by creating virtual personas that represent different groups of respondents – allowing researchers to simulate scenarios and gather insights without relying on actual survey participants.

Healthcare research specialists Day One Strategy show how this can be used as part of a research process into rare diseases and health conditions.

Marketing Week columnist Mark Ritson has enthused about its potential and predicted widespread adoption.

And you can see Insight Platforms demos of early synthetic data innovators like Yabble, Synthetic Users and PersonaPanels.

But it’s also a provocative and controversial trend.

The UX Research industry has generally reacted very negatively to both the idea and the specific capabilities of synthetic personas.

Leaders of several research companies have also been vocal in highlighting the risks they see in synthetic data.

But whether you like it or not, synthetic data is here to stay and will be an important part of the research & insights mix going forward.

For more on the topic, watch this panel discussion on the Opportunities and Risks of Synthetic Data for Consumer Insights – part of the Demo Days Event in February 2024.

5. Everybody’s Talking to Data

Data analytics will increasingly be mediated through conversational interfaces. Survey data analysis is no different.

ChatGPT’s Advanced Data Analysis capability, available on paid and Enterprise accounts, lets you upload a CSV data file for analysis. With the right file structure, labelling and prompts, you can now use ChatGPT to analyse your survey data and answer questions framed in natural language.

- “Which concept was rated highest amongst affluent consumers?”

- “Which demographic group finds the ad most appealing?”
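ChatGPT’s Advanced Data Analysis answers questions like these by writing and running Python code against the uploaded file. As a rough illustration of the kind of calculation behind the first question, here is a hand-written sketch using only the Python standard library. It is not output from ChatGPT, and the column names (`income_band`, `age_group`, `concept`, `rating`), the toy data and the `mean_rating_by` helper are all hypothetical stand-ins for whatever structure and labelling your own file uses.

```python
import csv
import io
from collections import defaultdict
from statistics import mean

# Hypothetical toy survey data: one row per respondent per concept rated.
# Column names and values are invented purely for illustration.
SURVEY_CSV = """respondent_id,income_band,age_group,concept,rating
1,affluent,18-34,Concept A,4
1,affluent,18-34,Concept B,5
2,affluent,35-54,Concept A,3
2,affluent,35-54,Concept B,4
3,mass,18-34,Concept A,5
3,mass,18-34,Concept B,2
4,mass,55+,Concept A,4
4,mass,55+,Concept B,3
"""


def load_rows(text):
    """Parse CSV text into a list of dicts, converting ratings to ints."""
    rows = list(csv.DictReader(io.StringIO(text)))
    for row in rows:
        row["rating"] = int(row["rating"])
    return rows


def mean_rating_by(rows, key, where=None):
    """Average rating grouped by column `key`, optionally filtered by `where`."""
    groups = defaultdict(list)
    for row in rows:
        if where is None or where(row):
            groups[row[key]].append(row["rating"])
    return {group: mean(values) for group, values in groups.items()}


rows = load_rows(SURVEY_CSV)

# "Which concept was rated highest amongst affluent consumers?"
# = filter to affluent respondents, group by concept, take the top mean.
affluent = mean_rating_by(rows, "concept", where=lambda r: r["income_band"] == "affluent")
best_concept = max(affluent, key=affluent.get)
print(affluent, "->", best_concept)
```

The second question follows the same filter-then-group pattern, grouping by a demographic column such as `age_group` instead of `concept`. This is also why file structure and labelling matter: clearly named columns are what let a natural-language question be mapped onto a filter and a grouping.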

It’s easy to see how Large Language Models will help put survey and data analysis into the hands of more people. You won’t need to be a research expert to analyse data in this way.

An early leader in the application of LLMs to survey data analysis is Inspirient, and you can watch a Lightning Demo of their solution here.

6. Never-Ending Storytelling

Have you ever spent hours – or days – crafting the perfect PowerPoint deck to showcase your thinking?

This is about to get a lot easier.

Tools such as Beautiful.ai, Tome and Gamma use AI to generate slideshows, reports and infographics automatically using only text inputs.

The inputs can be a short chat prompt; a list of bullet points; a blog article; a long-form Word document; pretty much any kind of text.

Over time, we can expect to see tools like this combined with platforms that are better tailored to research data, such as Displayr, Forsta Visualizations or other research analysis tools.

Combine the talk-to-data analytics capability of Trend #5 with the automated reporting and visualisation features of these tools, and you have rapid, intuitive storytelling with research data, driven by simple text-based and conversational inputs.

7. It’s a Race

It’s a cliché to say that today’s research processes run to ever shorter timelines. Clients are impatient. Stakeholders are worse. Researchers get whiplash from turning round projects so quickly.

Spoiler alert: it’s one of the trends in research & insights that will only get worse.

But at least there are new tools to help.

In the webinar version of this article, you can see a worked example of me turning my thoughts into a visual story in about 20 minutes.

- 5 mins thinking time to structure my ideas

- 3 mins narrating those thoughts into the iPhone Voice Recorder

- 2 mins to upload the audio file, transcribe and download it

- 5 mins to paste the transcription into Notion; have NotionAI tidy, edit and improve the copy; then top and tail with title and headings

- 2 mins to upload the polished copy into Gamma and have it generate a slideshow

- 3 mins to make a cup of tea

So it’s not all doom and gloom.

In fact, getting things off your desk sooner might feel good.

It’s easy to imagine using this process for rapid insights reporting after (say) moderating a discussion, interviewing users or running a co-creation workshop.

8. I Think I’m a Clone Now

Imagine creating a virtual version of you. This ‘you’ remembers all the useful knowledge you keep forgetting. It answers questions from stakeholders or clients while you’re on holiday. And it never gets impatient or frustrated when asked for something stupid.

‘Expert clones’ are already starting to emerge: LLMs that are trained on a body of expert knowledge (books, blog articles, podcast transcripts, recordings of classes etc).

Here is an example: ProfG.ai, the bot version of Scott Galloway, a leading entrepreneur and Professor of Marketing at NYU Stern School of Business. He is a well-known business and marketing pundit, author of several books, host of many podcasts, and writer of hundreds of blog posts.

All this content has been used to train the virtual Scott bot. At this stage, it’s more a proof of concept than a viable substitute for advice or consulting from the real Scott. But it is a good illustration of the potential for LLM ‘clones’ of research, insights, and data experts in the future.

Now, imagine replicating the most knowledgeable brand strategy or innovation expert in your agency; or creating a virtual insights team that can answer 50% of enquiries that come from stakeholders.

It’s coming.

9. Stakeholders are Doing it for Themselves

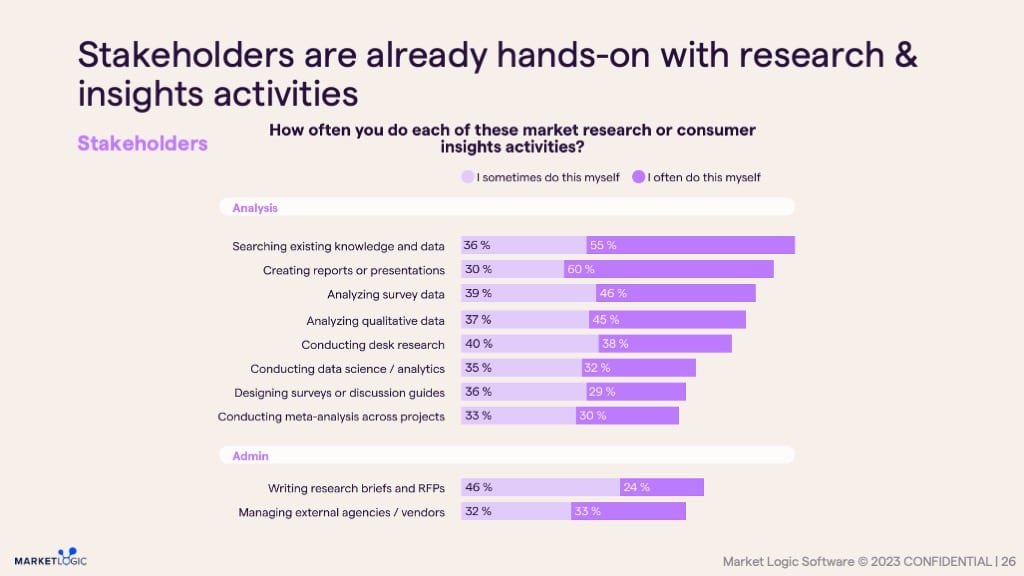

Non-experts are doing more and more of their own ‘DIY’ research and insights work. People who work in departments like Marketing, Sales, Strategy, Customer Operations and elsewhere (ie outside specialist insights or research departments) are using technology to conduct research, analyse data and work with existing data, insights or knowledge.

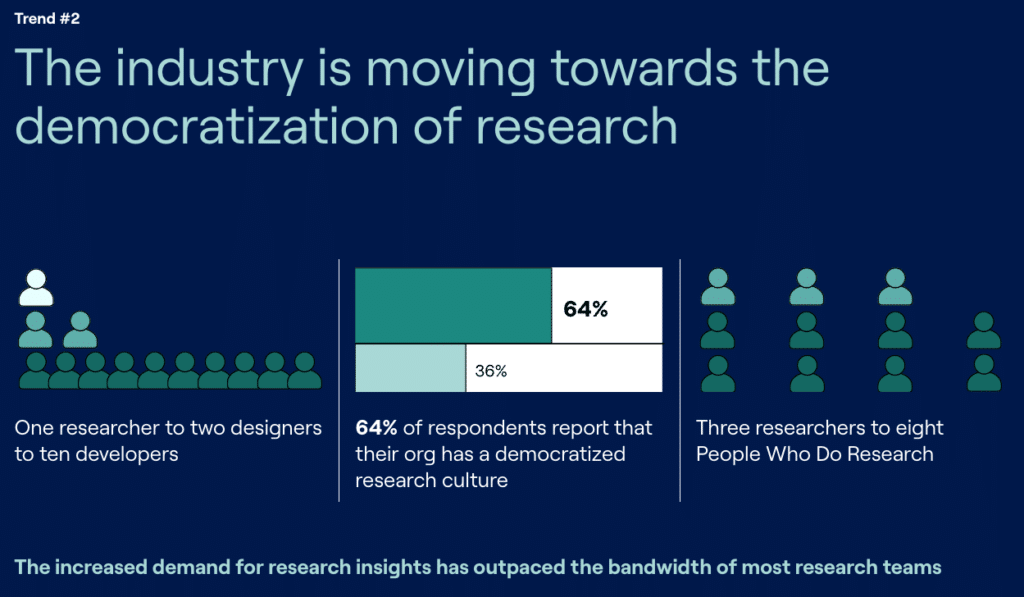

In the world of UX and Product Research, this model is firmly established. Designers, product managers, and even developers already outnumber expert researchers in the group of ‘People Who Do Research’ (PWDR). This report on Continuous Research Trends from Maze shows that, on average, for every three researchers, there are eight PWDR:

In the world of Consumer Insights and Market Research, the same trend is apparent. In late 2023, Insight Platforms partnered with Market Logic Software to conduct a survey of both insights team members and their stakeholders in enterprise organisations (ie with more than 1,000 employees).

Download the report from this study here or watch the recording of the presentation here.

Increasingly, technology is helping non-experts to gather their own insights. This democratisation process will accelerate as more AI-powered tools put research, data and knowledge directly into the hands of people who need it.

10. Happiness is Easy

A broader design and technology trend is finally taking root in the world of research tech applications: the drive towards simplicity and ease of use.

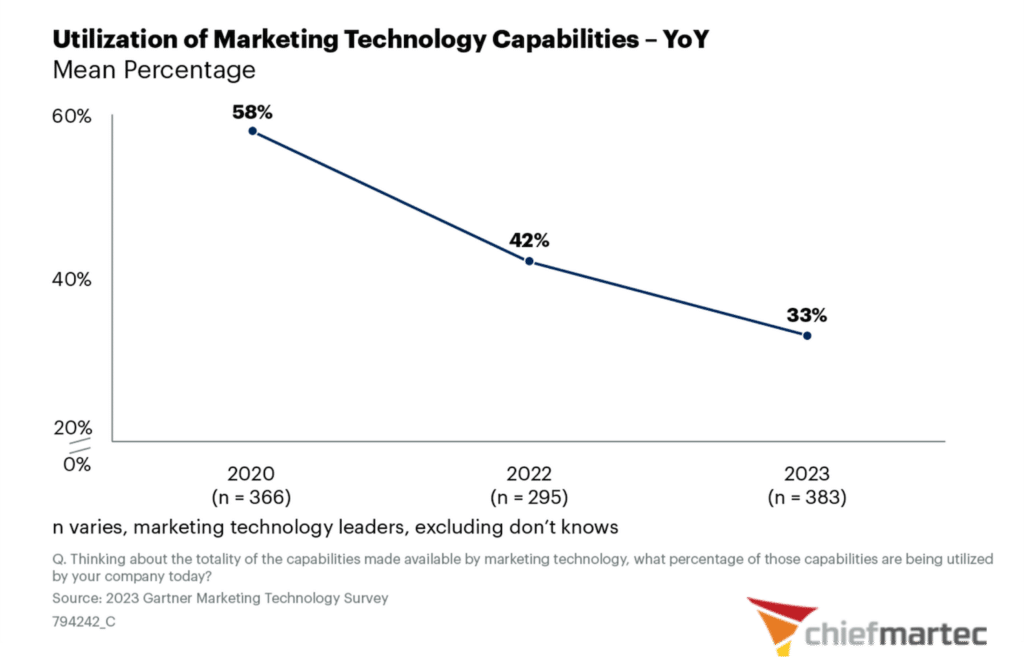

As users of marketing and research tech, we are overwhelmed by features and confused by complex interfaces. This is not a minor frustration: it is driving down both the utilisation and the perceived value of these solutions.

The Martech for 2024 report by ChiefMartec shows that utilisation of marketing technologies has been dropping over time: it fell from around 60% in 2020 to about 33% in 2023.
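Because both figures are themselves percentages, the size of the fall can be read in two ways. A quick worked calculation, using only the rounded figures quoted here (around 60% in 2020, about 33% in 2023), shows the difference between the absolute and the relative change:

$$
\begin{aligned}
\text{absolute change} &= 33\% - 60\% = -27 \text{ percentage points} \\
\text{relative change} &= \frac{33 - 60}{60} = -0.45 \approx -45\%
\end{aligned}
$$

On these approximate numbers, martech utilisation fell by almost half over three years.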

We are using these tools less effectively, partly because of poor interface design and a complex overall user experience.

To counter this and drive more engagement, builders of research tech are streamlining, simplifying and stripping back their product designs.

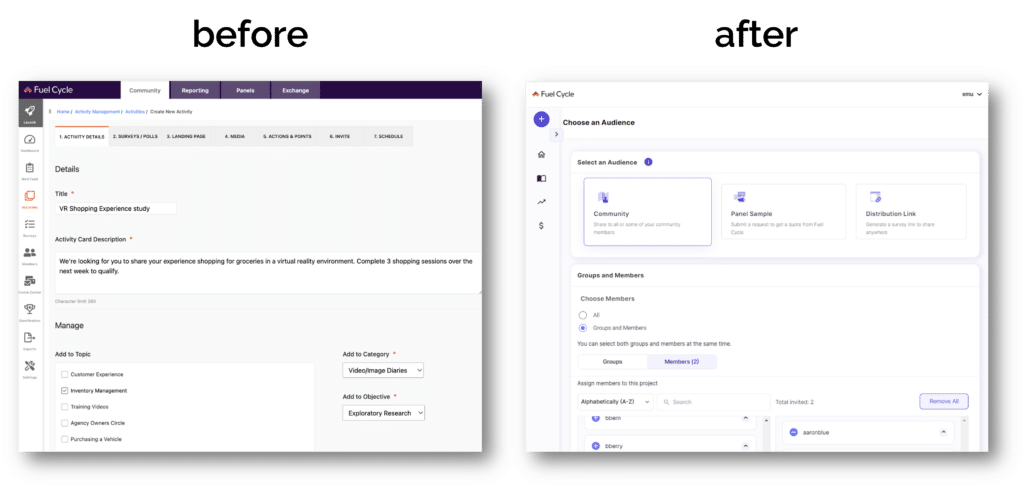

This example is from Fuel Cycle. Their UX overhaul led to a 70% reduction in the number of clicks and similar reduction in the time take to launch a project:

Final Thoughts on the Trends in Research & Insights for 2024

There’s a lot to unpack here, and I hope it’s not overwhelming.

But remember: the best way to stay up to date with all the key trends in research & insights is to get your regular dose from Insight Platforms.

Subscribe to emails and receive our weekly Research Tools Radar updates.

Join our Virtual Summits, Demo Days and Webinars.

And read all the great articles and ebooks from our own team and the industry’s leading innovators.